Marek Suppa

Serverless deployment of (large) NLP models

#1 about 9 minutes

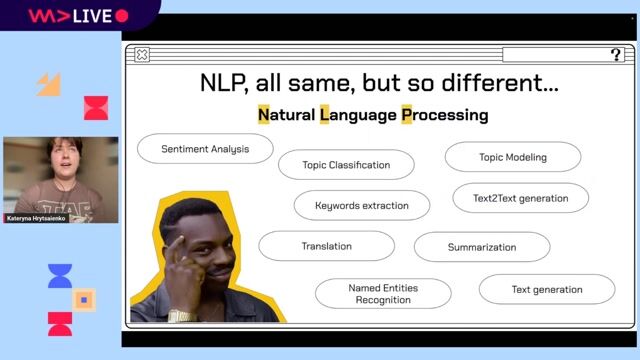

Exploring practical NLP applications at Slido

Slido uses several NLP-powered features to improve the user experience, including keyphrase extraction, sentiment analysis, and similar question detection.

#2 about 4 minutes

Choosing serverless for ML model deployment

Serverless was chosen for its ease of deployment and minimal maintenance, but it introduces challenges like cold starts and strict package size limits.

#3 about 8 minutes

Shrinking large BERT models for sentiment analysis

Knowledge distillation is used to train smaller, faster models like TinyBERT from a large, fine-tuned BERT base model without significant performance loss.
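
As a rough illustration of the idea, the sketch below computes a generic soft-label distillation loss: the student is penalised for diverging from the teacher's temperature-softened class probabilities. This is the textbook formulation, not necessarily the exact objective used in the talk (TinyBERT-style training typically adds layer-wise terms as well). The logits, the three-class setup, and the function names are assumptions made for the example.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Hypothetical logits for a 3-class sentiment task (negative, neutral, positive).
teacher = [0.2, 1.0, 4.0]
close_student = [0.1, 1.1, 3.8]   # roughly agrees with the teacher
far_student = [3.0, 1.0, 0.5]     # disagrees with the teacher

loss_close = distillation_loss(close_student, teacher)
loss_far = distillation_loss(far_student, teacher)
```

A student that tracks the teacher closely gets a loss near zero, while one that disagrees gets a larger loss; in practice this term is usually combined with the ordinary cross-entropy on the true labels.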

#4 about 8 minutes

Building an efficient similar question detection model

Sentence-BERT (SBERT) provides an efficient alternative to standard BERT for semantic similarity, and knowledge distillation helps create smaller, deployable versions.
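
The efficiency gain comes from embedding each question once and comparing vectors, instead of running a full BERT pass for every pair of questions. The sketch below shows only the comparison step with cosine similarity. The three-dimensional vectors, the `most_similar` helper, and the 0.8 threshold are made up for illustration; a real SBERT model produces embeddings with hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(query_vec, indexed, threshold=0.8):
    """Return (question, score) pairs scoring at least `threshold`, best first."""
    scored = [(q, cosine_similarity(query_vec, v)) for q, v in indexed.items()]
    hits = [(q, s) for q, s in scored if s >= threshold]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)

# Hypothetical precomputed embeddings for questions already asked.
indexed = {
    "When does the talk start?": [0.9, 0.1, 0.0],
    "What time is the session?": [0.8, 0.2, 0.1],
    "Is lunch provided?": [0.0, 0.1, 0.9],
}

# Hypothetical embedding of a newly submitted question.
new_question = [0.85, 0.15, 0.05]
matches = most_similar(new_question, indexed)
```

Because the stored embeddings are reused, each new question costs one model pass plus cheap vector arithmetic.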

#5 about 3 minutes

Using ONNX Runtime for lightweight model inference

The large PyTorch library is replaced with the much smaller ONNX Runtime to fit the model and its dependencies within AWS Lambda's package size limits.
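
One way to keep an eye on that constraint is to measure the build directory before deploying. The sketch below assumes a zip-based deployment, for which AWS documents a 250 MB unzipped limit (layers included). The helper names are hypothetical, and the 1 KB `model.onnx` file is a stand-in created only so the example runs.

```python
import os
import tempfile

# Documented limit for zip-based Lambda deployments: 250 MB unzipped.
LAMBDA_UNZIPPED_LIMIT_BYTES = 250 * 1024 * 1024

def directory_size(path):
    """Total size in bytes of all regular files under `path` (symlinks skipped)."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            file_path = os.path.join(root, name)
            if not os.path.islink(file_path):
                total += os.path.getsize(file_path)
    return total

def fits_in_lambda(path, limit=LAMBDA_UNZIPPED_LIMIT_BYTES):
    """Return (fits, size_in_bytes) for the deployment directory at `path`."""
    size = directory_size(path)
    return size <= limit, size

# Demo with a placeholder build directory; a real one would hold the
# exported model plus the inference runtime and its dependencies.
with tempfile.TemporaryDirectory() as build_dir:
    with open(os.path.join(build_dir, "model.onnx"), "wb") as f:
        f.write(b"\0" * 1024)
    ok, size = fits_in_lambda(build_dir)
    too_small_ok, _ = fits_in_lambda(build_dir, limit=512)
```

Running a check like this in CI makes a dependency that pushes the package over the limit visible before the deployment fails.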

#6 about 3 minutes

Analyzing serverless ML performance and cost-effectiveness

Allocating more RAM to a Lambda function also gives it more CPU, which improves inference speed and can make serverless more cost-effective than a dedicated server for uneven workloads.
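
The trade-off can be estimated with simple arithmetic: Lambda compute is billed per GB-second plus a per-request fee, while a dedicated server costs a flat amount regardless of traffic. The sketch below is a simplified model that ignores the free tier and data transfer. All prices and the server cost are illustrative placeholders, not current AWS pricing, and the function names are hypothetical.

```python
def lambda_monthly_cost(requests, avg_duration_s, memory_gb,
                        price_per_gb_second, price_per_request):
    """Estimated monthly bill: GB-seconds of compute plus per-request fees."""
    compute = requests * avg_duration_s * memory_gb * price_per_gb_second
    return compute + requests * price_per_request

def break_even_requests(server_monthly_cost, avg_duration_s, memory_gb,
                        price_per_gb_second, price_per_request):
    """Monthly request count at which Lambda costs as much as the server."""
    per_request = (avg_duration_s * memory_gb * price_per_gb_second
                   + price_per_request)
    return server_monthly_cost / per_request

# Placeholder prices for illustration only; check the provider's price list.
PRICE_PER_GB_SECOND = 0.0000167
PRICE_PER_REQUEST = 0.0000002
SERVER_MONTHLY_COST = 70.0  # hypothetical always-on instance

# Same workload at two memory settings, assuming that doubling memory
# halves the inference time (an assumption, not a measured result).
cost_1gb = lambda_monthly_cost(500_000, 0.4, 1.0,
                               PRICE_PER_GB_SECOND, PRICE_PER_REQUEST)
cost_2gb = lambda_monthly_cost(500_000, 0.2, 2.0,
                               PRICE_PER_GB_SECOND, PRICE_PER_REQUEST)
threshold = break_even_requests(SERVER_MONTHLY_COST, 0.2, 2.0,
                                PRICE_PER_GB_SECOND, PRICE_PER_REQUEST)
```

In this model the bill depends on the product of memory and duration, so a larger memory setting is free whenever it shortens the run proportionally. Below the break-even request count the serverless option is cheaper, which is why it suits bursty or uneven traffic.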

#7 about 3 minutes

Key takeaways for deploying NLP models serverlessly

Successful serverless deployment of large NLP models requires aggressive model size reduction, lightweight inference libraries, and an understanding of the platform's limitations.

Featured Partners

Related Videos

34:21

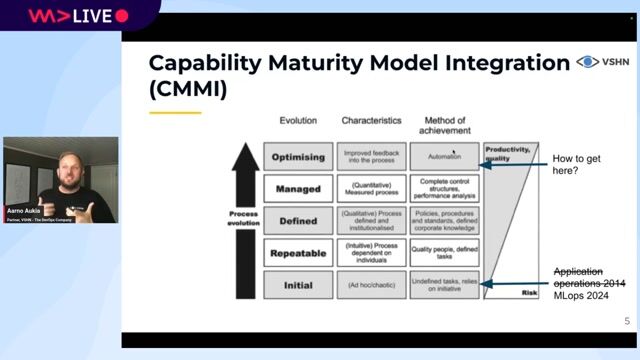

34:21DevOps for AI: running LLMs in production with Kubernetes and KubeFlow

Aarno Aukia

31:25

31:25Unveiling the Magic: Scaling Large Language Models to Serve Millions

Patrick Koss

39:58

39:58Leverage Cloud Computing Benefits with Serverless Multi-Cloud ML

Linda Mohamed

23:19

23:19End the Monolith! Lessons learned adopting Serverless

Nočnica Fee

52:37

52:37Multilingual NLP pipeline up and running from scratch

Kateryna Hrytsaienko

30:04

30:04Self-Hosted LLMs: From Zero to Inference

Roberto Carratalá & Cedric Clyburn

27:23

27:23From ML to LLM: On-device AI in the Browser

Nico Martin

48:07

48:07Server Side Serverless in Swift

Sebastien Stormacq
