PySpark Big Data Engineer

Staffworx Ltd

Charing Cross, United Kingdom

2 days ago

Role details

Contract type

Permanent contract

Employment type

Full-time (> 32 hours)

Working hours

Regular working hours

Languages

English

Job location

Remote

Charing Cross, United Kingdom

Tech stack

Airflow

Amazon Web Services (AWS)

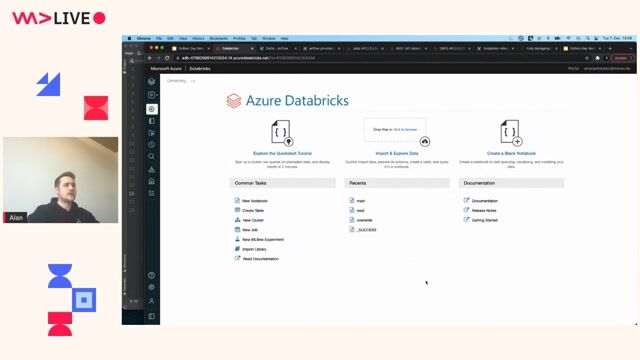

Azure

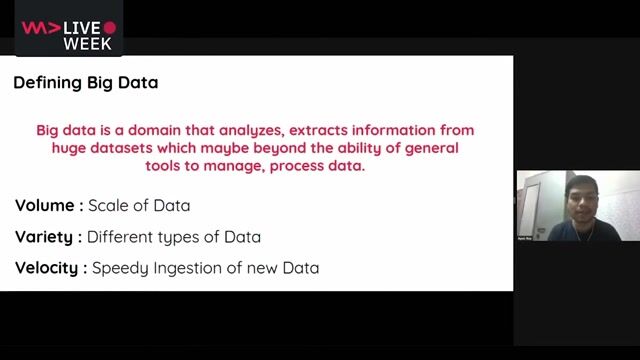

Big Data

Information Engineering

DevOps

Python

NoSQL

SQL Databases

Data Ingestion

Spark

Git

PySpark

Kubernetes

Terraform

Data Pipelines

Docker

Jenkins

Requirements

- PySpark Data Engineering experience

- Python, Spark, SQL & NoSQL experience

- Data Engineering tech

- Big Data, Apache Airflow workflow automation for data pipelines

- AWS ideally, or GCP or Azure

- AWS Services, AWS EMR clusters

- DevOps culture: Jenkins, Kubernetes (k8s), Terraform, Helm, Docker

- TDD/BDD methodologies, Git

- Data ingestion, data pipelines (Apache Airflow workflow automation)

About the company

This advert was posted by Staffworx Limited - a UK-based recruitment consultancy supporting the global e-commerce, software & consulting sectors. Services advertised by Staffworx are those of an Agency and/or an Employment Business.

Staffworx operate a referral scheme of £500 or a new iPad for each successfully referred candidate. If you know of someone suitable, please forward their details for consideration.