Ayon Roy

PySpark - Combining Machine Learning & Big Data

#1 about 3 minutes

Combining big data and machine learning for business insights

The exponential growth of data necessitates combining big data processing with machine learning to personalize user experiences and drive revenue.

#2 about 3 minutes

An introduction to the Apache Spark analytics engine

Apache Spark is a unified analytics engine for large-scale data processing that provides high-level APIs and specialized libraries like Spark SQL and MLlib.

#3 about 4 minutes

Understanding Spark's core data APIs and abstractions

Spark's data abstractions evolved from the low-level Resilient Distributed Dataset (RDD) to the more optimized and user-friendly DataFrame and Dataset APIs.

#4 about 11 minutes

How the Spark cluster architecture enables parallel processing

In Spark's architecture, a driver program requests resources from a cluster manager and schedules tasks on executors, which run on the worker nodes and process data in parallel.
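The driver and executor split can be imitated on a single machine. What follows is a minimal sketch in plain Python, not Spark code: a thread pool stands in for the executors, and the names `split_into_partitions`, `count_words`, and `run_job` are hypothetical, chosen only for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(records, num_partitions):
    """Deal records round-robin into num_partitions lists (stand-ins for data partitions)."""
    return [records[i::num_partitions] for i in range(num_partitions)]


def count_words(partition):
    """Task run by one 'executor': count the words in its own partition only."""
    return sum(len(line.split()) for line in partition)


def run_job(records, num_executors=3):
    """'Driver': split the data, submit one task per partition, combine the partial results."""
    partitions = split_into_partitions(records, num_executors)
    with ThreadPoolExecutor(max_workers=num_executors) as pool:
        partial_counts = list(pool.map(count_words, partitions))
    return sum(partial_counts)


lines = [
    "spark runs tasks in parallel",
    "the driver coordinates",
    "executors do the work",
]
total_words = run_job(lines)
print(total_words)
```

The point of the sketch is the shape of the computation: each task sees only its own partition, and only the small partial results travel back to the coordinating process.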

#5 about 5 minutes

Using Python with Spark through the PySpark library

PySpark provides a Python API for Spark, using the Py4J library to communicate between the Python process and Spark's core JVM environment.

#6 about 5 minutes

Exploring the key features of the Spark MLlib library

Spark's MLlib offers a comprehensive toolkit for machine learning, including pre-built algorithms, featurization tools, pipelines for workflow management, and model persistence.

#7 about 4 minutes

The standard workflow for machine learning in PySpark

A typical machine learning workflow in Spark involves using DataFrames, applying Transformers for feature engineering, training a model with an Estimator, and orchestrating these steps with a Pipeline.
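The relationship between Transformers, Estimators, and Pipelines can be illustrated without a Spark installation. The sketch below is a toy analogue in plain Python and does not use the PySpark API: rows are `(feature, label)` tuples instead of DataFrames, and the classes `Scaler`, `MeanThresholdEstimator`, `MeanThresholdModel`, `Pipeline`, and `PipelineModel` are simplified stand-ins written for this example.

```python
class Scaler:
    """Toy Transformer: maps a dataset to a new dataset without learning anything."""

    def __init__(self, factor):
        self.factor = factor

    def transform(self, rows):
        return [(x * self.factor, y) for x, y in rows]


class MeanThresholdModel:
    """Fitted model: a Transformer that appends a 0/1 prediction to each row."""

    def __init__(self, threshold):
        self.threshold = threshold

    def transform(self, rows):
        return [(x, y, 1 if x > self.threshold else 0) for x, y in rows]


class MeanThresholdEstimator:
    """Toy Estimator: fit() learns a parameter from the data and returns a model."""

    def fit(self, rows):
        mean_x = sum(x for x, _ in rows) / len(rows)
        return MeanThresholdModel(mean_x)


class PipelineModel:
    """Result of fitting a Pipeline: applies every fitted stage in order."""

    def __init__(self, stages):
        self.stages = stages

    def transform(self, rows):
        for stage in self.stages:
            rows = stage.transform(rows)
        return rows


class Pipeline:
    """Chains stages; each Estimator is fitted on the output of the stages before it."""

    def __init__(self, stages):
        self.stages = stages

    def fit(self, rows):
        fitted = []
        for stage in self.stages:
            if hasattr(stage, "fit"):
                stage = stage.fit(rows)  # Estimator becomes a fitted model
            rows = stage.transform(rows)  # pass transformed data to the next stage
            fitted.append(stage)
        return PipelineModel(fitted)


training_rows = [(1.0, 0), (2.0, 0), (3.0, 1), (4.0, 1)]
pipeline = Pipeline(stages=[Scaler(factor=10), MeanThresholdEstimator()])
model = pipeline.fit(training_rows)
predictions = model.transform(training_rows)
print(predictions)
```

Fitting the pipeline once and reusing the resulting model means the same feature steps are applied at training time and at prediction time, which is the main reason for orchestrating the stages together.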

#8 about 3 minutes

Pre-built algorithms and utilities available in Spark MLlib

MLlib includes common pre-built algorithms for classification, regression, and clustering, such as logistic regression, support vector machines (SVM), and K-means clustering.
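To make one of these algorithms concrete, here is a minimal single-machine sketch of K-means (Lloyd's algorithm) on one-dimensional points. It is plain Python for illustration only and is not MLlib's distributed implementation; the function name `kmeans_1d` and the sample data are assumptions made for this example.

```python
def kmeans_1d(points, initial_centroids, max_iterations=10):
    """Lloyd's algorithm on 1-D points, starting from the given centroids."""
    centroids = list(initial_centroids)
    clusters = [[] for _ in centroids]
    for _ in range(max_iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        # An empty cluster keeps its previous centroid.
        new_centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return centroids, clusters


points = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
final_centroids, final_clusters = kmeans_1d(points, initial_centroids=[1.0, 12.0])
print(final_centroids)
print(final_clusters)
```

With this data the centroids settle at the means of the two obvious groups after two passes. A distributed version has to perform the same assignment and update steps across partitions.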

Featured Partners

Related Videos

57:46

57:46Overview of Machine Learning in Python

Adrian Schmitt

43:57

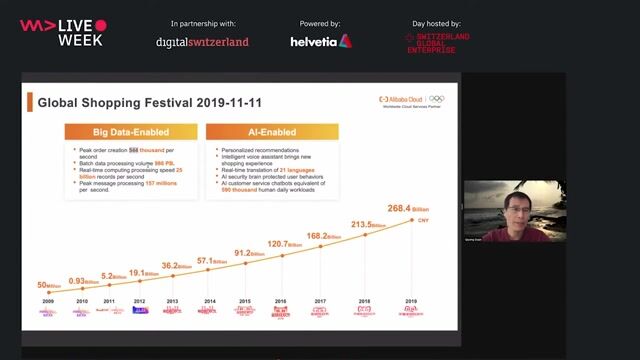

43:57Alibaba Big Data and Machine Learning Technology

Dr. Qiyang Duan

39:14

39:14Fully Orchestrating Databricks from Airflow

Alan Mazankiewicz

58:21

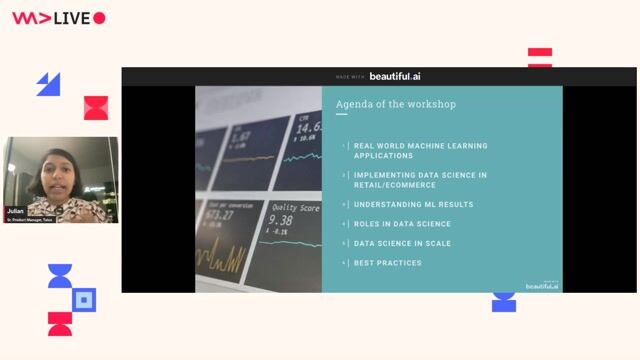

58:21Data Science in Retail

Julian Joseph

39:04

39:04Python-Based Data Streaming Pipelines Within Minutes

Bobur Umurzokov

46:43

46:43Convert batch code into streaming with Python

Bobur Umurzokov

1:09:49

1:09:49Inside the AI Revolution: How Microsoft is Empowering the World to Achieve More

Simi Olabisi

58:06

58:06From Syntax to Singularity: AI’s Impact on Developer Roles

Anna Fritsch-Weninger
