Jodie Burchell

Lies, Damned Lies and Large Language Models

#1about 2 minutes

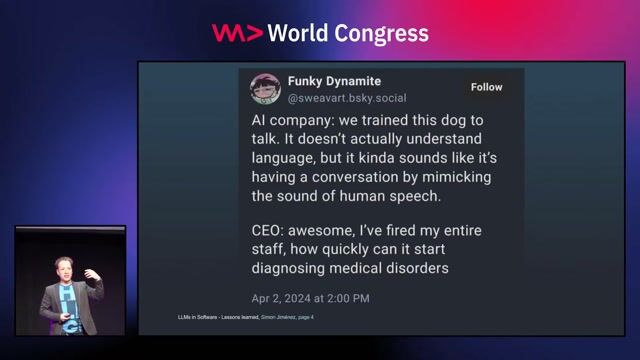

Understanding the dual nature of large language models

LLMs can generate both creative, coherent text and factually incorrect "hallucinations," posing a significant challenge for real-world applications.

#2about 4 minutes

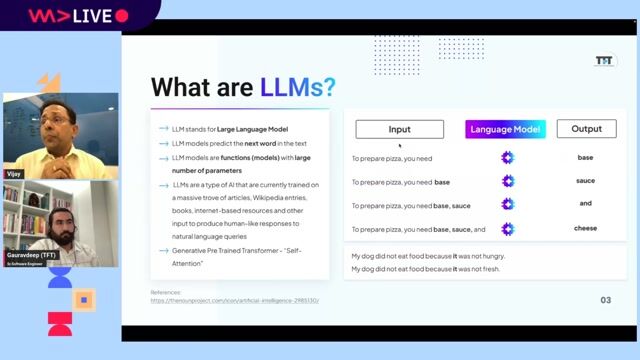

The architecture and evolution of LLMs

The combination of the scalable Transformer architecture and massive text datasets enables models like GPT to develop "parametric knowledge" as they grow in size.

#3about 3 minutes

How training data quality influences model behavior

The quality of web-scraped datasets like Common Crawl, even after filtering, directly contributes to model hallucinations by embedding misinformation.

#4about 2 minutes

Differentiating between faithfulness and factuality hallucinations

Hallucinations are categorized as either faithfulness errors, which contradict a given source text, or factuality errors, which stem from incorrect learned knowledge.

#5about 3 minutes

Using the TruthfulQA dataset to measure misinformation

The TruthfulQA dataset provides a benchmark for measuring an LLM's tendency to repeat common misconceptions and conspiracy theories across various categories.

#6about 6 minutes

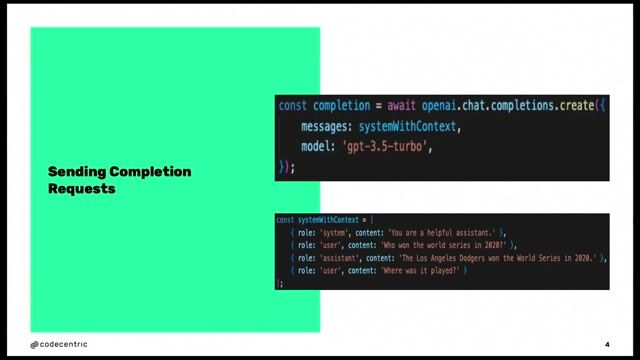

A practical guide to benchmarking LLM hallucinations

A step-by-step demonstration shows how to use Python, LangChain, and Hugging Face Datasets to run the TruthfulQA benchmark on a model like GPT-3.5 Turbo.

#7about 4 minutes

Exploring strategies to reduce LLM hallucinations

Key techniques to mitigate hallucinations include careful prompt crafting, domain-specific fine-tuning, output evaluation, and retrieval-augmented generation (RAG).

#8about 4 minutes

A deep dive into retrieval-augmented generation

RAG reduces hallucinations by augmenting prompts with relevant, up-to-date information retrieved from a vector database of document embeddings.

#9about 2 minutes

Overcoming challenges with advanced RAG techniques

Naive RAG can fail due to poor retrieval or generation, but advanced methods like Rowan selectively apply retrieval to significantly improve factuality.

Related jobs

Jobs that call for the skills explored in this talk.

Picnic Technologies B.V.

Amsterdam, Netherlands

Intermediate

Senior

Python

Structured Query Language (SQL)

+1

Wilken GmbH

Ulm, Germany

Senior

Kubernetes

AI Frameworks

+3

Matching moments

07:43 MIN

Writing authentic content in the age of LLMs

Slopquatting, API Keys, Fun with Fonts, Recruiters vs AI and more - The Best of LIVE 2025 - Part 2

03:55 MIN

The hardware requirements for running LLMs locally

AI in the Open and in Browsers - Tarek Ziadé

04:59 MIN

Unlocking LLM potential with creative prompting techniques

WeAreDevelopers LIVE – Frontend Inspirations, Web Standards and more

04:17 MIN

Playing a game of real or fake tech headlines

WeAreDevelopers LIVE – You Don’t Need JavaScript, Modern CSS and More

00:59 MIN

Distinguishing real from fake tech headlines

Fake or News: Coding on a Phone, Emotional Support Toasters, ChatGPT Weddings and more - Anselm Hannemann

01:15 MIN

Crypto crime, EU regulation, and working while you sleep

Fake or News: Self-Driving Cars on Subscription, Crypto Attacks Rising and Working While You Sleep - Théodore Lefèvre

07:39 MIN

Prompt injection as an unsolved AI security problem

AI in the Open and in Browsers - Tarek Ziadé

02:20 MIN

The evolving role of the machine learning engineer

AI in the Open and in Browsers - Tarek Ziadé

Featured Partners

Related Videos

58:00

58:00Creating Industry ready solutions with LLM Models

Vijay Krishan Gupta & Gauravdeep Singh Lotey

27:11

27:11Inside the Mind of an LLM

Emanuele Fabbiani

42:26

42:26How to Avoid LLM Pitfalls - Mete Atamel and Guillaume Laforge

Meta Atamel & Guillaume Laforge

29:11

29:11Large Language Models ❤️ Knowledge Graphs

Michael Hunger

24:42

24:42Martin O'Hanlon - Make LLMs make sense with GraphRAG

Martin O'Hanlon

26:30

26:30Three years of putting LLMs into Software - Lessons learned

Simon A.T. Jiménez

27:35

27:35Give Your LLMs a Left Brain

Stephen Chin

31:12

31:12Using LLMs in your Product

Daniel Töws

Related Articles

View all articles

.png?w=240&auto=compress,format)

From learning to earning

Jobs that call for the skills explored in this talk.

Forschungszentrum Jülich GmbH

Jülich, Germany

Intermediate

Senior

Linux

Docker

AI Frameworks

Machine Learning

Xablu

Hengelo, Netherlands

Intermediate

.NET

Python

PyTorch

Blockchain

TensorFlow

+3

Hyperproof

Municipality of Madrid, Spain

€45K

Machine Learning

Envirorec

Barcelona, Spain

Remote

€50-75K

Azure

Python

Machine Learning

+1

The Rolewe

Charing Cross, United Kingdom

API

Python

Machine Learning

Envirorec

Municipality of Madrid, Spain

Remote

€50-75K

Azure

Python

Machine Learning

+1

Apple Inc.

Cambridge, United Kingdom

C++

Java

Bash

Perl

Python

+4

European Tech Recruit

Municipality of Zaragoza, Spain

Junior

Python

Docker

PyTorch

Computer Vision

Machine Learning

+1