Vijay Krishan Gupta & Gauravdeep Singh Lotey

Creating Industry-Ready Solutions with LLM Models

#1 (about 3 minutes)

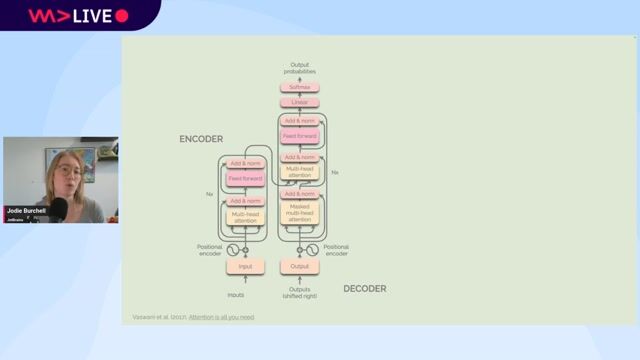

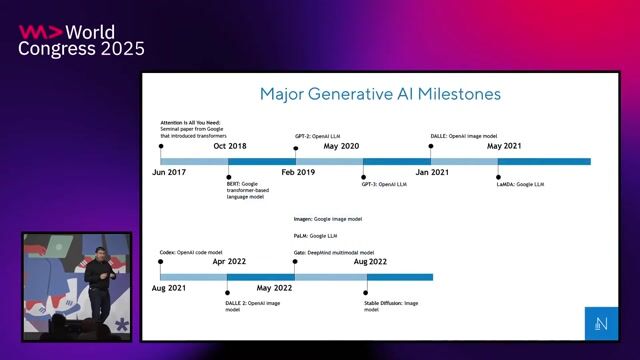

Understanding LLMs and the transformer self-attention mechanism

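Large Language Models (LLMs) are characterized by their parameter count and training data. The transformer's self-attention mechanism is key to resolving ambiguity in language, because it lets each token weigh every other token in the context when building its representation.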
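To make the self-attention idea concrete, here is a minimal single-head scaled dot-product attention in plain Python, following the standard formula softmax(QK^T / sqrt(d_k))V. The toy inputs and identity projection matrices are assumptions for illustration; real models learn the projections and stack many heads and layers.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # a: n x k, b: k x m  ->  n x m
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: token vectors (seq_len x d_model)
    w_q, w_k, w_v: projection matrices (d_model x d_k)
    Returns (output vectors, attention weights).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    scores = matmul(q, transpose(k))  # how strongly each token attends to each other token
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy tokens
identity = [[1.0, 0.0], [0.0, 1.0]]
out, w = self_attention(x, identity, identity, identity)
```

Each row of `w` is a probability distribution over the sequence, so every output vector is a context-weighted mix of the value vectors.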

#2 (about 4 minutes)

Exploring the business adoption and emergent abilities of LLMs

Businesses are rapidly adopting LLMs because of their emergent abilities, such as in-context learning, instruction following, and chain-of-thought reasoning, which go beyond the next-token prediction objective the models were trained on.

#3 (about 9 minutes)

Demo of an enterprise assistant for integrated systems

The Simplify Path demo showcases a unified chatbot interface that integrates with enterprise systems such as HRMS (human resource management systems), Jira, and Salesforce, handling both informational queries and transactional tasks.

#4 (about 3 minutes)

Demo of a document compliance checker for pharmaceuticals

The Doc Compliance tool validates pharmaceutical documents against a source-of-truth compliance document to ensure all parameters meet regulatory requirements.
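The talk does not show the tool's internals. Assuming an LLM has already extracted parameter values from the document under review, one plausible core step is a rule-by-rule comparison against the source-of-truth document. The `Rule` format, the parameter names, and the range check below are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Rule:
    # Hypothetical rule format: the parameter must lie within [minimum, maximum]
    name: str
    minimum: float
    maximum: float

def check_compliance(rules, document_values):
    """Compare extracted values with the reference rules.

    Returns human-readable findings; an empty list means the document is compliant.
    """
    findings = []
    for rule in rules:
        value = document_values.get(rule.name)
        if value is None:
            findings.append(f"{rule.name}: missing from document")
        elif not (rule.minimum <= value <= rule.maximum):
            findings.append(f"{rule.name}: {value} outside [{rule.minimum}, {rule.maximum}]")
    return findings

# Hypothetical source-of-truth rules and values extracted from a document
rules = [Rule("storage_temp_c", 2.0, 8.0), Rule("assay_purity_pct", 98.0, 100.0)]
findings = check_compliance(rules, {"storage_temp_c": 10.0})
# findings == ['storage_temp_c: 10.0 outside [2.0, 8.0]', 'assay_purity_pct: missing from document']
```

Keeping the final check deterministic, rather than asking the model for a yes/no verdict, makes the result repeatable and auditable.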

#5 (about 3 minutes)

Demo of a chatbot builder for any website

Web Water is a product that turns any website into an interactive chatbot: it scrapes the site's HTML, text, and media content and uses it to answer user questions.
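The product's scraper is not shown in the talk. As a minimal sketch of the scraping step, assuming a single page's HTML is already downloaded, Python's standard `html.parser` can collect the visible text and image sources, and a hypothetical `chunk_words` helper can split the text into overlapping chunks ready for indexing.

```python
from html.parser import HTMLParser

class PageExtractor(HTMLParser):
    """Collects visible text and (src, alt) pairs for images from an HTML page."""
    SKIP = {"script", "style", "noscript"}

    def __init__(self):
        super().__init__()
        self.text_parts = []
        self.images = []
        self._skip_depth = 0  # > 0 while inside a non-visible element

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1
        elif tag == "img":
            attrs = dict(attrs)
            if attrs.get("src"):
                self.images.append((attrs["src"], attrs.get("alt", "")))

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.text_parts.append(data.strip())

def chunk_words(text, size=50, overlap=10):
    """Split text into word chunks of `size` words, overlapping by `overlap` (< size) words."""
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]

page = PageExtractor()
page.feed("<body><h1>Opening hours</h1><p>We open at 9am.</p>"
          "<script>var x = 1;</script><img src='logo.png' alt='Logo'></body>")
```

The overlap keeps sentences that straddle a chunk boundary retrievable from either side.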

#6 (about 5 minutes)

Navigating the common challenges of building with LLMs

Key challenges in developing LLM applications include managing hallucinations, ensuring data privacy in sensitive industries, improving usability, and coping with the lack of repeatability (the same prompt can produce different outputs).

#7 (about 7 minutes)

Using prompt optimization to improve LLM usability

Prompt optimization techniques, such as assigning the model a role and using zero-shot, few-shot, or chain-of-thought prompting, can significantly improve the quality and relevance of LLM outputs.
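The techniques above can be combined in one prompt builder. This sketch assumes the widely used chat format of `{"role": ..., "content": ...}` messages; `build_prompt` is a hypothetical helper, and the exact wording of the chain-of-thought instruction is an illustrative choice.

```python
def build_prompt(role, task, examples=(), chain_of_thought=False):
    """Assemble a chat-style message list.

    role: system persona (role prompting)
    examples: (input, output) pairs; empty means zero-shot, non-empty means few-shot
    chain_of_thought: ask the model to reason step by step before answering
    """
    messages = [{"role": "system", "content": role}]
    for user_text, assistant_text in examples:
        # Each example becomes a user/assistant exchange the model can imitate
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    if chain_of_thought:
        task = task + "\nThink through the problem step by step, then give the final answer."
    messages.append({"role": "user", "content": task})
    return messages

zero_shot = build_prompt("You are an HR assistant.", "How many leave days do I have?")
few_shot = build_prompt(
    "You classify IT support tickets into categories.",
    "The printer on floor 3 is jammed.",
    examples=[("VPN keeps disconnecting", "network"), ("Forgot my password", "access")],
    chain_of_thought=True,
)
```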

#8 (about 4 minutes)

Advanced techniques like RAG, function calling, and fine-tuning

LLM limitations can be addressed with Retrieval-Augmented Generation (RAG) to supply domain-specific knowledge, function calling to perform real-time tasks through external tools and APIs, and fine-tuning to build specialized models.
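Function calling works by having the model emit a structured request that the application executes. A minimal dispatcher sketch follows, assuming the model has been instructed to reply with JSON of the form `{"name": ..., "arguments": {...}}`; the `get_leave_balance` tool and its return value are hypothetical stand-ins for a real HRMS call.

```python
import json

def get_leave_balance(employee_id: str) -> dict:
    # Hypothetical stand-in for a call to an HRMS API
    return {"employee_id": employee_id, "days_left": 12}

# Registry of tools the model is allowed to call
TOOLS = {"get_leave_balance": get_leave_balance}

def dispatch(model_output: str) -> str:
    """Execute the tool call requested by the model and return the result as JSON.

    The returned string would normally be sent back to the model so it can
    phrase the final answer for the user.
    """
    call = json.loads(model_output)
    func = TOOLS.get(call["name"])
    if func is None:
        return json.dumps({"error": f"unknown tool {call['name']}"})
    return json.dumps(func(**call.get("arguments", {})))

result = dispatch('{"name": "get_leave_balance", "arguments": {"employee_id": "E42"}}')
```

Restricting calls to an explicit registry means the model can only trigger actions the application has deliberately exposed.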

#9 (about 10 minutes)

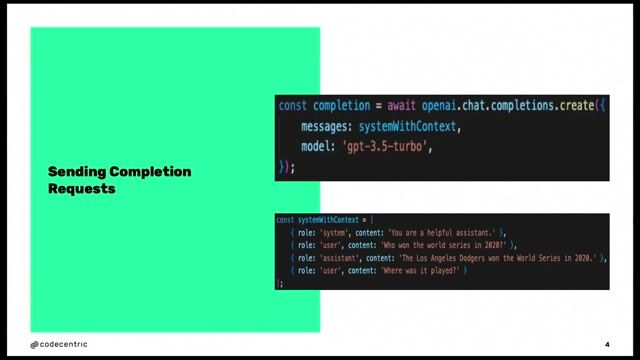

Code walkthrough for building a RAG-based chatbot

A practical code demonstration shows how to build a RAG pipeline using LangChain, ChromaDB for vector storage, and an open-source Llama 2 model to answer questions from a specific document.
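The demo's LangChain, ChromaDB, and Llama 2 code is not reproduced here. To show the same retrieve-then-generate flow without guessing at library signatures, this stdlib-only sketch swaps in toy term-frequency vectors for learned embeddings and a hypothetical `InMemoryStore` for ChromaDB; the final call to the LLM with the assembled prompt is left out.

```python
import math
import re
from collections import Counter

def embed(text):
    # Toy "embedding": term-frequency vector over lowercase word tokens.
    # A real pipeline would use a learned embedding model instead.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class InMemoryStore:
    """Minimal stand-in for a vector store such as ChromaDB."""

    def __init__(self, chunks):
        self.items = [(chunk, embed(chunk)) for chunk in chunks]

    def search(self, query, k=2):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]

def build_rag_prompt(store, question, k=2):
    # Ground the model in retrieved chunks to reduce hallucinations
    context = "\n".join(store.search(question, k))
    return ("Answer the question using only the context below. "
            "If the answer is not in the context, say you don't know.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")

docs = [
    "Employees receive 24 days of paid leave per year.",
    "The office is closed on public holidays.",
    "Expense reports are due by the 5th of each month.",
]
store = InMemoryStore(docs)
prompt = build_rag_prompt(store, "When are expense reports due?", k=1)
```

Because nothing in this flow calls an external service, it also illustrates how RAG can run fully offline when paired with a locally hosted model.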

#10 (about 9 minutes)

Q&A on integration, offline RAG, and the future of LLMs

The discussion covers integrating LLMs into organizations, running RAG offline, suitability for small businesses, and the evolution towards large action models (LAMs).

Related jobs

Jobs that call for the skills explored in this talk.

Wilken GmbH

Ulm, Germany

Senior

Kubernetes

AI Frameworks

+3

Picnic Technologies B.V.

Amsterdam, Netherlands

Intermediate

Senior

Python

Structured Query Language (SQL)

+1

Matching moments

05:03 MIN

Building and iterating on an LLM-powered product

Slopquatting, API Keys, Fun with Fonts, Recruiters vs AI and more - The Best of LIVE 2025 - Part 2

02:20 MIN

The evolving role of the machine learning engineer

AI in the Open and in Browsers - Tarek Ziadé

09:10 MIN

How AI is changing the freelance developer experience

WeAreDevelopers LIVE – AI, Freelancing, Keeping Up with Tech and More

07:43 MIN

Writing authentic content in the age of LLMs

Slopquatting, API Keys, Fun with Fonts, Recruiters vs AI and more - The Best of LIVE 2025 - Part 2

04:59 MIN

Unlocking LLM potential with creative prompting techniques

WeAreDevelopers LIVE – Frontend Inspirations, Web Standards and more

03:55 MIN

The hardware requirements for running LLMs locally

AI in the Open and in Browsers - Tarek Ziadé

04:04 MIN

Shifting HR from standard products to AI-powered platforms

Turning People Strategy into a Transformation Engine

03:28 MIN

Why corporate AI adoption lags behind the hype

What 2025 Taught Us: A Year-End Special with Hung Lee

Featured Partners

Related Videos

23:50

23:50Data Privacy in LLMs: Challenges and Best Practices

Aditi Godbole

42:26

42:26How to Avoid LLM Pitfalls - Mete Atamel and Guillaume Laforge

Meta Atamel & Guillaume Laforge

31:12

31:12Using LLMs in your Product

Daniel Töws

41:45

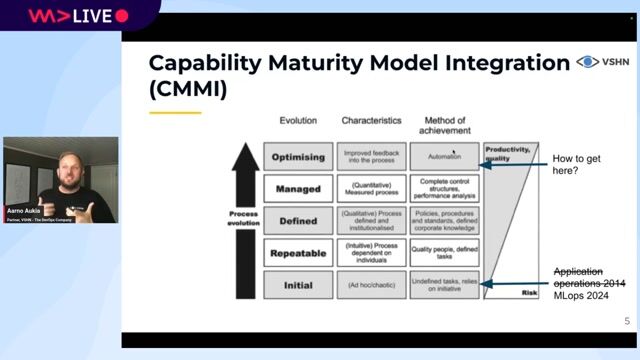

41:45From Traction to Production: Maturing your LLMOps step by step

Maxim Salnikov

26:25

26:25Building Blocks of RAG: From Understanding to Implementation

Ashish Sharma

29:40

29:40Lies, Damned Lies and Large Language Models

Jodie Burchell

28:38

28:38Exploring LLMs across clouds

Tomislav Tipurić

34:21

34:21DevOps for AI: running LLMs in production with Kubernetes and KubeFlow

Aarno Aukia

Related Articles

View all articles

.png?w=240&auto=compress,format)

From learning to earning

Jobs that call for the skills explored in this talk.

Xablu

Hengelo, Netherlands

Intermediate

.NET

Python

PyTorch

Blockchain

TensorFlow

+3

Starion Group

Municipality of Madrid, Spain

API

CSS

Python

Docker

Machine Learning

+1

Leading Enterprise Ai & Llm Company

Woking, United Kingdom

Remote

£75-100K

Senior

PyTorch

Machine Learning

Leading Enterprise Ai & Llm Company

Abbas and Templecombe, United Kingdom

Remote

£75-100K

Senior

PyTorch

Machine Learning

European Tech Recruit

Municipality of Zaragoza, Spain

Junior

Python

Docker

PyTorch

Computer Vision

Machine Learning

+1

Envirorec

Barcelona, Spain

Remote

€50-75K

Azure

Python

Machine Learning

+1

The Rolewe

Charing Cross, United Kingdom

API

Python

Machine Learning

Leading Enterprise Ai & Llm Company

Derby, United Kingdom

Remote

£75-100K

Senior

PyTorch

Machine Learning