Maxim Salnikov

From Traction to Production: Maturing your LLMOps step by step

#1 about 1 minute

Understanding the business motivation for adopting AI solutions

AI investments typically return three to five dollars for every dollar spent, within about 14 months.

#2 about 4 minutes

Overcoming the common challenges in generative AI adoption

Key obstacles to adopting generative AI include the rapid pace of innovation, the need for specialized expertise, data integration complexity, and difficulties in evaluation and operationalization.

#3 about 3 minutes

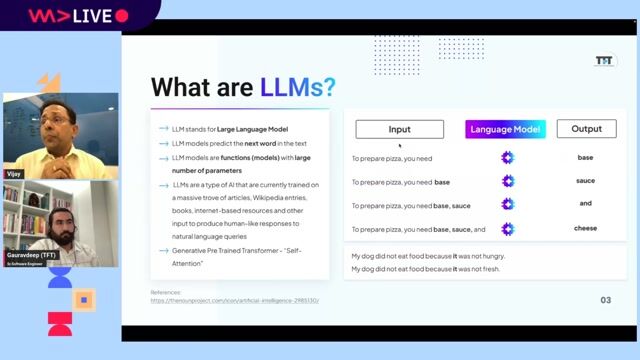

Defining LLMOps and understanding its core benefits

LLMOps is a specialized discipline, similar to DevOps, that combines people, processes, and platforms to automate and manage the lifecycle of LLM-infused applications.

#4 about 3 minutes

Differentiating between LLMOps and traditional MLOps

LLMOps focuses on application developers and assets like prompts and APIs, whereas MLOps is geared towards data scientists and focuses on building and training models from scratch.

#5 about 5 minutes

Exploring the complete lifecycle of an LLM application

The LLM application lifecycle involves iterative cycles of ideation, building with prompt engineering and RAG, and operationalization, all governed by security and compliance.

#6 about 5 minutes

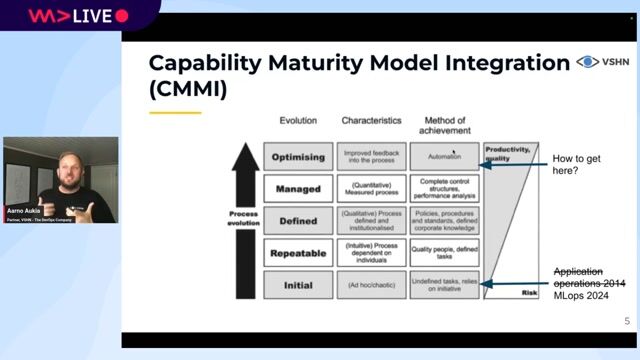

Navigating the four stages of the LLMOps maturity model

The LLMOps maturity model progresses through four stages: initial (manual), developing, managed, and optimized, with the final stage bringing full automation and continuous improvement.
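
The four stages can be written down as an ordered type, which makes "where are we, and what comes next" an explicit question. This is a minimal sketch: the stage names come from the summary above, while `MaturityStage` and `next_stage` are hypothetical names introduced here for illustration and are not part of any Azure tooling.

```python
from enum import IntEnum


class MaturityStage(IntEnum):
    """The four stages named in the talk, ordered from least to most mature."""
    INITIAL = 1      # manual, ad hoc work
    DEVELOPING = 2
    MANAGED = 3
    OPTIMIZED = 4    # full automation and continuous improvement


def next_stage(current: MaturityStage) -> MaturityStage:
    """Return the stage to aim for next; OPTIMIZED has no successor."""
    if current is MaturityStage.OPTIMIZED:
        return current
    return MaturityStage(current + 1)


print(next_stage(MaturityStage.INITIAL).name)  # DEVELOPING
```

Using an ordered enum rather than free-form strings lets a team compare stages directly, for example `MaturityStage.MANAGED > MaturityStage.DEVELOPING`.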

#7 about 5 minutes

Introducing the Azure AI platform for end-to-end LLMOps

Azure AI provides a comprehensive suite of tools, including Azure AI Foundry, to support the entire LLM lifecycle from model selection to deployment and governance.

#8 about 3 minutes

Using Azure AI for model selection and benchmarking

The Azure AI model catalog offers over 1,800 models and includes powerful benchmarking tools to compare them based on quality, cost, latency, and throughput.

#9 about 5 minutes

Building applications with RAG and Azure Prompt Flow

Azure AI Search facilitates retrieval-augmented generation (RAG), while the open-source Prompt Flow framework helps orchestrate, evaluate, and manage complex LLM workflows.
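
To make the RAG pattern concrete, here is a toy sketch of its two core steps: retrieve the passages most relevant to a question, then place them in the prompt sent to the model. It deliberately uses a naive keyword-overlap retriever over an in-memory list instead of Azure AI Search, and it stops before the model call, so it is not the Prompt Flow API. The function names and sample documents are assumptions made for illustration.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Return up to top_k documents sharing the most tokens with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # drop non-matching docs
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(question, passages):
    """Ground the question in the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
    )


documents = [
    "LLMOps combines people, processes, and platforms.",
    "The maturity model has four stages.",
    "Prompt Flow helps orchestrate and evaluate LLM workflows.",
]
question = "How many stages does the maturity model have?"

passages = retrieve(question, documents, top_k=1)
prompt = build_prompt(question, passages)
print(prompt)
```

In a real system the retriever would typically be a search index with vector or hybrid ranking, and the assembled prompt would be passed to an LLM; the overall shape of retrieve, then augment, then generate stays the same.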

#10 about 5 minutes

Deploying and monitoring flows with Azure AI tools

Azure AI enables the deployment of Prompt Flow workflows as scalable endpoints and includes tools for fine-tuning, content safety filtering, and comprehensive monitoring of cost and performance.

#11 about 2 minutes

How to assess and advance your LLMOps maturity

To mature your LLMOps practices, start by assessing your current stage, understanding the application lifecycle, and selecting the right tools, such as Azure AI Foundry.
