Anshul Jindal

LLMOps-driven fine-tuning, evaluation, and inference with NVIDIA NIM & NeMo Microservices

#1 about 6 minutes

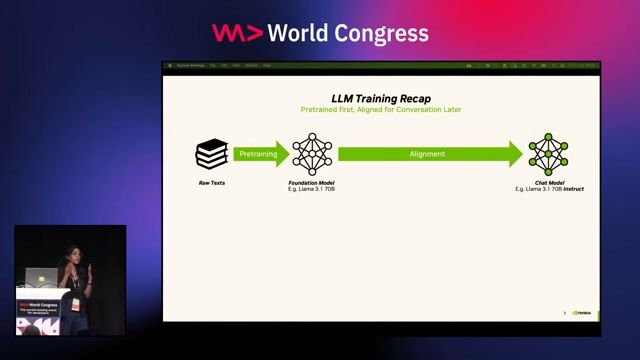

Understanding the GenAI lifecycle and its operational challenges

GenAI applications run through a continuous cycle of data processing, model customization, and deployment, which introduces production challenges such as the lack of standardized CI/CD and versioning.

#2 about 2 minutes

Breaking down the structured stages of an LLMOps pipeline

An effective LLMOps process moves a model from an experimental proof-of-concept through evaluation, pre-production testing, and finally to a production environment.
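
The stage progression described here can be pictured as a small state machine in which a model advances only when the gate for its current stage passes. The following is a minimal sketch, not the speaker's implementation: the `Stage` enum, the `promote` function, and the idea of a single boolean gate per stage are illustrative assumptions.

```python
from enum import Enum


class Stage(Enum):
    """Stages named in the talk summary, in pipeline order."""
    POC = "proof-of-concept"
    EVALUATION = "evaluation"
    PRE_PRODUCTION = "pre-production"
    PRODUCTION = "production"


# Enum members iterate in definition order, which is the pipeline order.
ORDER = list(Stage)


def promote(current: Stage, gate_passed: bool) -> Stage:
    """Advance one stage if the (hypothetical) gate passed.

    A failed gate keeps the model where it is, and production is terminal.
    """
    if not gate_passed or current is Stage.PRODUCTION:
        return current
    return ORDER[ORDER.index(current) + 1]


if __name__ == "__main__":
    stage = Stage.POC
    for passed in (True, False, True, True):
        stage = promote(stage, passed)
        print(stage.value)
```

In practice each gate would be backed by concrete checks (evaluation scores, load tests, approvals); the boolean stands in for whatever those checks decide.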

#3 about 4 minutes

Introducing the NVIDIA NeMo microservices and ecosystem tools

NVIDIA provides a suite of tools including NeMo Curator, Customizer, Evaluator, and NIM, which integrate with ecosystem components like Argo Workflows and Argo CD for a complete LLMOps solution.

#4 about 4 minutes

Using NeMo Customizer and Evaluator for model adaptation

NeMo Customizer and Evaluator simplify model adaptation through API requests that trigger fine-tuning on custom datasets and benchmark the resulting model's performance.
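
To make "adaptation through API requests" concrete, the sketch below builds (but does not send) a JSON POST request using only the Python standard library. Everything service-specific is a placeholder: the host name, the URL path, and the payload fields `base_model` and `dataset` are hypothetical and are not taken from the NeMo Customizer or Evaluator API. Consult the official API reference for the real endpoints and schemas.

```python
import json
import urllib.request


def build_json_post(base_url: str, path: str, payload: dict) -> urllib.request.Request:
    """Build a JSON POST request object without sending it."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + path,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical host, path, and field names, used purely for illustration.
finetune_request = build_json_post(
    "http://customizer.example.internal",
    "/hypothetical/fine-tuning-jobs",
    {"base_model": "example-base-model", "dataset": "example-dataset"},
)

if __name__ == "__main__":
    print(finetune_request.get_method(), finetune_request.full_url)
    print(finetune_request.data.decode("utf-8"))
```

An evaluation request would follow the same shape: a second POST naming the fine-tuned model and a benchmark, again with fields defined by the actual service.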

#5 about 3 minutes

Deploying and scaling models with NVIDIA NIM on Kubernetes

NVIDIA NIM packages models into optimized inference containers that can be deployed and auto-scaled on Kubernetes using the NIM Operator, with support for serving multiple fine-tuned adapters.
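
As a rough illustration of what auto-scaling on Kubernetes involves, the sketch below applies the replica formula documented for the Kubernetes Horizontal Pod Autoscaler: `desired = ceil(current_replicas * current_metric / target_metric)`, clamped to configured bounds. It assumes the deployment is scaled by an HPA-style controller; the function name, the default bounds, and the choice of metric are illustrative, and the real controller also applies a tolerance band and stabilization windows that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 8,
) -> int:
    """Compute the HPA-style replica count, clamped to [min, max]."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Two replicas at 90% utilization against a 60% target scale out.
    print(desired_replicas(2, 90.0, 60.0))
    # Four replicas at 10% utilization scale in to the minimum.
    print(desired_replicas(4, 10.0, 60.0))
```

For LLM inference, utilization of the GPU or a queue-depth style metric is usually a more meaningful signal than CPU, but the arithmetic is the same.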

#6 about 4 minutes

Automating complex LLM workflows with Argo Workflows

Argo Workflows enables the creation of automated, multi-step pipelines by stitching together containerized tasks for data processing, model customization, evaluation, and deployment.
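
The four tasks named above can be chained as a DAG in an Argo `Workflow` manifest. The sketch below generates such a manifest as a Python dictionary and prints it as JSON, which Kubernetes tooling accepts alongside YAML. The step names, the container image, and the `python -m tasks` entry point are placeholders and do not come from the talk; only the manifest structure (`entrypoint`, `templates`, `dag.tasks`, `dependencies`) follows the Argo Workflows schema.

```python
import json

# Hypothetical step names mirroring the stages listed in the summary.
STEPS = ["process-data", "customize-model", "evaluate-model", "deploy-model"]


def build_workflow(steps, image="example.registry/llmops-task:latest"):
    """Build an Argo Workflow manifest that runs the steps in sequence."""
    dag_tasks = []
    for i, name in enumerate(steps):
        task = {
            "name": name,
            "template": "run-step",
            "arguments": {"parameters": [{"name": "step", "value": name}]},
        }
        if i > 0:
            # Each task waits for the previous one to finish.
            task["dependencies"] = [steps[i - 1]]
        dag_tasks.append(task)

    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "llmops-pipeline-"},
        "spec": {
            "entrypoint": "pipeline",
            "templates": [
                {"name": "pipeline", "dag": {"tasks": dag_tasks}},
                {
                    "name": "run-step",
                    "inputs": {"parameters": [{"name": "step"}]},
                    "container": {
                        "image": image,  # placeholder image
                        "command": ["python", "-m", "tasks"],
                        "args": ["{{inputs.parameters.step}}"],
                    },
                },
            ],
        },
    }


workflow = build_workflow(STEPS)

if __name__ == "__main__":
    print(json.dumps(workflow, indent=2))
```

A single reusable `run-step` template keeps the manifest short; a real pipeline would more likely give each stage its own template, image, and resource requests.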

#7 about 3 minutes

Implementing a GitOps approach for end-to-end LLMOps

Using Git as the single source of truth, Argo CD automates the deployment and management of all LLMOps components, including microservices and workflows, onto Kubernetes clusters.
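
In a GitOps setup of this kind, each component is typically declared as an Argo CD `Application` that points at a path in a Git repository. The sketch below generates one such manifest per component. The repository URL, component names, paths, and target namespace are hypothetical; the fields themselves (`source`, `destination`, `syncPolicy.automated`) follow the Argo CD `Application` resource.

```python
import json

# Hypothetical repository and component layout, for illustration only.
REPO_URL = "https://git.example.com/platform/llmops.git"
COMPONENTS = ["customizer", "evaluator", "nim", "workflows"]


def build_application(component: str, namespace: str = "llmops") -> dict:
    """Build an Argo CD Application that syncs one component from Git."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": f"llmops-{component}", "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": REPO_URL,
                "targetRevision": "main",
                "path": f"apps/{component}",
            },
            "destination": {
                "server": "https://kubernetes.default.svc",
                "namespace": namespace,
            },
            # Automated sync: remove resources deleted from Git and
            # revert manual changes made directly on the cluster.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


applications = [build_application(name) for name in COMPONENTS]

if __name__ == "__main__":
    print(json.dumps(applications, indent=2))
```

With `prune` and `selfHeal` enabled, the cluster converges on whatever is committed to the repository, which is what makes Git the single source of truth.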

#8 about 3 minutes

Demonstrating the automated LLMOps pipeline in action

A practical demonstration shows how Argo CD manages deployed services and how a data scientist can launch a complete fine-tuning workflow through the Argo Workflows UI, with results tracked in MLflow.

Featured Partners

Related Videos

34:21

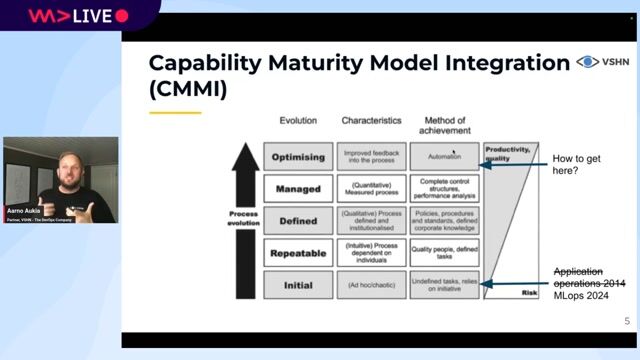

34:21DevOps for AI: running LLMs in production with Kubernetes and KubeFlow

Aarno Aukia

23:01

23:01Efficient deployment and inference of GPU-accelerated LLMs

Adolf Hohl

25:35

25:35Adding knowledge to open-source LLMs

Sergio Perez & Harshita Seth

41:45

41:45From Traction to Production: Maturing your LLMOps step by step

Maxim Salnikov

31:02

31:02MLOps on Kubernetes: Exploring Argo Workflows

Hauke Brammer

29:24

29:24MLOps - What’s the deal behind it?

Nico Axtmann

45:45

45:45Effective Machine Learning - Managing Complexity with MLOps

Simon Stiebellehner

30:04

30:04Self-Hosted LLMs: From Zero to Inference

Roberto Carratalá & Cedric Clyburn
