Dieter Flick

Building Real-Time AI/ML Agents with Distributed Data using Apache Cassandra and Astra DB

#1 · about 3 minutes

Introducing the DataStax real-time data cloud

The platform combines Apache Cassandra, Apache Pulsar, and Kaskada to provide a flexible database, streaming, and machine learning solution for developers.

#2 · about 3 minutes

Interacting with Astra DB using GraphQL and REST APIs

A live demonstration shows how to create a schema, ingest data, and query tables in Astra DB using both GraphQL and REST API endpoints.

#3 · about 1 minute

Understanding real-time AI and its applications

Real-time AI leverages the most recent data to power predictive analytics and automated actions, as seen in use cases from Uber and Netflix.

#4 · about 2 minutes

What is Retrieval Augmented Generation (RAG)?

RAG is a pattern that allows large language models to access and use your proprietary, up-to-date data to provide contextually relevant responses.

#5 · about 3 minutes

Key steps for building a generative AI agent

The process involves defining the agent's purpose, choosing an LLM, selecting context data, picking an embedding model, and performing prompt engineering.

#6 · about 3 minutes

Exploring the architecture of a RAG system

A RAG system uses a vector database to perform a similarity search on data embeddings, finding relevant context to enrich the prompt sent to the LLM.
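To make the similarity-search step concrete, here is a minimal sketch in plain Python. It is not the talk's code and does not use Astra DB: the in-memory `store` list, the three-dimensional vectors, and the helper names `cosine_similarity` and `top_k` are all assumptions chosen for illustration. A real vector database would run this ranking server-side over much larger embeddings.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)

def top_k(query_vec, store, k=2):
    """Return the k (text, score) pairs most similar to query_vec.

    `store` is a hypothetical in-memory list of (text, vector) pairs
    standing in for a vector database.
    """
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in store]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Made-up three-dimensional "embeddings" for illustration only.
store = [
    ("Cassandra is a distributed database.", [1.0, 0.0, 0.0]),
    ("Pulsar is a streaming platform.", [0.0, 1.0, 0.0]),
    ("Astra DB is a managed Cassandra service.", [0.9, 0.1, 0.0]),
]
results = top_k([1.0, 0.0, 0.0], store, k=2)
for text, score in results:
    print(f"{score:.3f}  {text}")
```

The texts returned by `top_k` are what would be added to the prompt as context.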

#7 · about 3 minutes

Generating vector embeddings from text content

A Jupyter Notebook demonstrates splitting source text into chunks and using an embedding model to create vector representations for storage and search.
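The chunk-then-embed step can be sketched as follows. This is not the notebook from the talk: `split_into_chunks` is a hypothetical word-based splitter with overlap, and `toy_embed` is a deliberately simple hashed bag-of-words stand-in for a real embedding model, used only so the example runs without external services. The chunk size, overlap, and vector dimension are arbitrary assumptions.

```python
import hashlib
import math

def split_into_chunks(text, chunk_size=8, overlap=2):
    """Split text into word-based chunks that overlap by `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

def toy_embed(text, dims=16):
    """Stand-in embedding: hashed bag-of-words, L2-normalized. NOT a real model."""
    vec = [0.0] * dims
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        vec[digest[0] % dims] += 1.0  # bucket each word by its hash
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec

document = (
    "Astra DB is a managed database built on Apache Cassandra. "
    "It can store vector embeddings next to regular columns, "
    "which makes similarity search available to applications."
)
# Each record pairs a chunk with its vector, ready to be written to a store.
records = [(chunk, toy_embed(chunk)) for chunk in split_into_chunks(document)]
print(len(records), "chunks embedded")
```

The overlap keeps sentences that straddle a chunk boundary retrievable from either side. In practice, `toy_embed` would be replaced by a call to the chosen embedding model.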

#8 · about 4 minutes

The end-to-end data flow of a RAG query

A user's question is converted into an embedding, which drives a similarity search in the vector store; the results are then combined with other context to build the final prompt.
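The last step of this flow, assembling the prompt, can be illustrated with a short sketch. The function name `build_prompt`, the prompt wording, and the sample chunks are assumptions for illustration. The talk's actual prompt template is not shown in this summary.

```python
def build_prompt(question, retrieved_chunks, extra_context=""):
    """Assemble a context-enriched prompt from retrieved chunks."""
    # Number the chunks so the model (and a reader) can tell them apart.
    context_lines = [
        f"[{i}] {chunk}" for i, chunk in enumerate(retrieved_chunks, start=1)
    ]
    parts = ["Answer the question using only the context below.", ""]
    if extra_context:
        parts += ["Additional context:", extra_context, ""]
    parts += ["Context:", *context_lines, "", f"Question: {question}", "Answer:"]
    return "\n".join(parts)

# Hypothetical results of a similarity search for the question below.
retrieved = [
    "Astra DB offers a free tier for developers.",
    "Astra DB is available in multiple cloud regions.",
]
prompt = build_prompt(
    "Can I try Astra DB without paying?",
    retrieved,
    extra_context="The user is a developer evaluating databases.",
)
print(prompt)
```

Keeping the instruction, context, and question in clearly separated sections makes it easier to inspect what the model was given when an answer looks wrong.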

#9 · about 3 minutes

Executing a RAG prompt to get an LLM response

The demo shows how the context-enriched prompt is sent to an LLM to generate a relevant answer, including how to add memory for conversational history.
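One simple way to add conversational memory is to keep the most recent turns and prepend them to each new prompt. The sketch below assumes exactly that. `ConversationMemory` and `fake_llm` are hypothetical names, and `fake_llm` returns a canned string instead of calling a model. The demo in the talk may use a different memory mechanism.

```python
from collections import deque

class ConversationMemory:
    """Keeps the most recent question/answer turns for reuse in later prompts."""

    def __init__(self, max_turns=3):
        # deque with maxlen silently drops the oldest turn when full.
        self._turns = deque(maxlen=max_turns)

    def add_turn(self, question, answer):
        self._turns.append((question, answer))

    def as_text(self):
        return "\n".join(f"User: {q}\nAssistant: {a}" for q, a in self._turns)

def fake_llm(prompt):
    """Hypothetical stand-in for an LLM call; returns a canned string."""
    return f"(answer based on {len(prompt)} prompt characters)"

memory = ConversationMemory(max_turns=2)
questions = ["What is Astra DB?", "Does it support vectors?", "Is there a free tier?"]
for question in questions:
    # Earlier turns come first, then the new question.
    prompt = f"{memory.as_text()}\nUser: {question}\nAssistant:".lstrip()
    memory.add_turn(question, fake_llm(prompt))

print(memory.as_text())
```

Bounding the history keeps the prompt within the model's context window, at the cost of forgetting older turns.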

#10 · about 3 minutes

Getting started with the Astra DB vector database

Resources are provided for getting started with Astra DB, including quick starts, a free tier for developers, and information on multi-cloud region support.

Matching moments

06:28 MIN

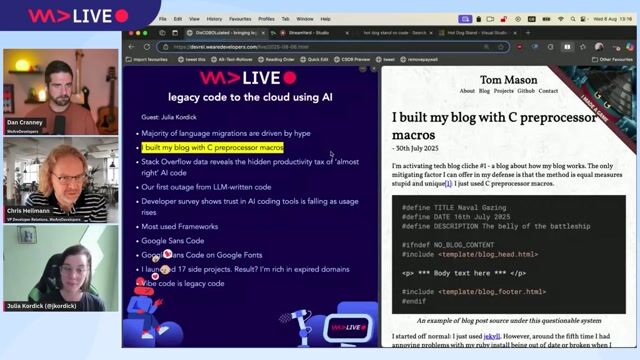

Using AI agents to modernize legacy COBOL systems

Devs vs. Marketers, COBOL and Copilot, Make Live Coding Easy and more - The Best of LIVE 2025 - Part 3

04:57 MIN

Increasing the value of talk recordings post-event

Cat Herding with Lions and Tigers - Christian Heilmann

04:06 MIN

Using AI to enable human connection in recruiting

Retention Over Attraction: A New Employer Branding Mindset

03:28 MIN

Why corporate AI adoption lags behind the hype

What 2025 Taught Us: A Year-End Special with Hung Lee

03:15 MIN

The future of recruiting beyond talent acquisition

What 2025 Taught Us: A Year-End Special with Hung Lee

09:10 MIN

How AI is changing the freelance developer experience

WeAreDevelopers LIVE – AI, Freelancing, Keeping Up with Tech and More

06:33 MIN

The security challenges of building AI browser agents

AI in the Open and in Browsers - Tarek Ziadé

02:49 MIN

Using AI to overcome challenges in systems programming

AI in the Open and in Browsers - Tarek Ziadé

Featured Partners
