Artem Volk & Fabian Zillgens

Building the platform for providing ML predictions based on real-time player activity

#1 about 3 minutes

Customizing the player experience in real time

The business goal is to use real-time player activity to deliver personalized in-game content, such as customized store offers.

#2 about 3 minutes

Designing the high-level system architecture

The platform follows a three-stage architecture for event collection, data processing, and customization delivery using a standard AWS tech stack.

#3 about 2 minutes

Building a resilient event collection pipeline

A slim API endpoint ingests high-volume, potentially out-of-order player events and uses an Amazon Kinesis stream to decouple it from downstream processing.
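A minimal sketch of what such a slim endpoint might do, assuming a hypothetical event schema (`player_id`, `event_type`, `client_ts`) and using an in-memory `InMemoryStream` class as a stand-in for the Kinesis stream. None of these names come from the talk. The idea illustrated is that the endpoint only validates, stamps a receive time, and forwards, leaving any reordering to downstream consumers.

```python
import json
import time
from dataclasses import dataclass, field


@dataclass
class InMemoryStream:
    """Hypothetical stand-in for a Kinesis stream; records are kept in a list."""
    records: list = field(default_factory=list)

    def put(self, partition_key: str, data: bytes) -> None:
        self.records.append((partition_key, data))


REQUIRED_FIELDS = ("player_id", "event_type", "client_ts")  # assumed schema


def ingest(event: dict, stream: InMemoryStream, now=time.time) -> bool:
    """Validate cheaply, stamp the receive time, and hand off to the stream."""
    if any(name not in event for name in REQUIRED_FIELDS):
        return False  # reject malformed events without doing further work
    # Keep the client timestamp and add a server one, so consumers can
    # reorder late or out-of-order events themselves.
    enriched = dict(event, server_ts=now())
    # Using the player id as partition key keeps one player's events together.
    stream.put(str(event["player_id"]), json.dumps(enriched).encode("utf-8"))
    return True
```

Keeping both timestamps is one possible design choice: the endpoint stays stateless, and ordering decisions move to the processing stage.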

#4 about 2 minutes

Separating offline and online data processing

The system uses a dual-path approach, with Apache Spark for offline analytics and Apache Flink with Flink SQL for real-time feature extraction.

#5 about 2 minutes

Creating a low-latency user profile service

A user profile API stores a real-time snapshot of the player's state, updated by the Flink stream with a latency of around 200 milliseconds.
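One way a profile snapshot could stay consistent when updates arrive out of order is to keep the event time alongside each value and ignore older updates. The sketch below assumes this last-write-wins rule and a hypothetical `PlayerProfile` class. The talk does not say how the actual service resolves conflicts.

```python
from dataclasses import dataclass, field


@dataclass
class PlayerProfile:
    """Hypothetical snapshot: latest value per feature plus the event time that set it."""
    values: dict = field(default_factory=dict)
    updated_at: dict = field(default_factory=dict)

    def apply(self, feature: str, value, event_ts: float) -> bool:
        """Apply an update unless a newer event already set this feature."""
        if event_ts < self.updated_at.get(feature, float("-inf")):
            return False  # stale, out-of-order update: ignore it
        self.values[feature] = value
        self.updated_at[feature] = event_ts
        return True
```

For example, after `apply("level", 5, 200.0)`, a late `apply("level", 4, 150.0)` returns `False` and leaves the stored level at 5.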

#6 about 3 minutes

Delivering customizations via decoupled ML models

Machine learning models are deployed as independent AWS Lambda functions that data scientists can manage, allowing the game to pull personalized content on demand.
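As a rough illustration, a model packaged as a Lambda function could look like the sketch below. The `lambda_handler(event, context)` signature is the standard shape for Python Lambda handlers. The request shape (`event["profile"]`), the offer names, and the rule inside `score_offers` are assumptions that stand in for a real trained model.

```python
import json


def score_offers(profile: dict) -> list:
    """Placeholder for a trained model: a fixed rule stands in for real inference."""
    if profile.get("coins", 0) < 100:
        return ["coin_pack_small"]
    return ["cosmetic_bundle"]


def lambda_handler(event, context):
    """Entry point in the shape AWS Lambda expects for Python handlers."""
    profile = event.get("profile", {})  # assumed request shape
    return {
        "statusCode": 200,
        "body": json.dumps({"offers": score_offers(profile)}),
    }
```

Because the handler depends only on its input, a data scientist could swap the body of `score_offers` for a different model without touching the rest of the platform, which is the decoupling the chapter describes.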

#7 about 5 minutes

Analyzing system latency and architectural trade-offs

Monitoring tools built for data scientists expose end-to-end latency metrics, and the discussion weighs the advantages and costs of a highly decoupled system.

#8 about 2 minutes

Implementing AWS cost optimization strategies

Costs are managed through techniques like event batching, data compression, aggressive Kinesis autoscaling, and S3 data partitioning and storage classes.
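Two of these techniques can be sketched with the standard library alone. The example assumes newline-delimited JSON with gzip for batches and a Hive-style `year=/month=/day=/hour=` key layout for S3 partitioning. Both are common conventions, not details confirmed by the talk, and the function names are hypothetical.

```python
import gzip
import json
from datetime import datetime, timezone


def pack_batch(events: list) -> bytes:
    """Serialize events as newline-delimited JSON and gzip the whole batch."""
    lines = "\n".join(json.dumps(e, separators=(",", ":")) for e in events)
    return gzip.compress(lines.encode("utf-8"))


def unpack_batch(blob: bytes) -> list:
    """Inverse of pack_batch, as a consumer would run it."""
    text = gzip.decompress(blob).decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line]


def partition_prefix(event_ts: float, root: str = "events") -> str:
    """Build a date-based key prefix (Hive-style layout, an assumption here)."""
    t = datetime.fromtimestamp(event_ts, tz=timezone.utc)
    return f"{root}/year={t:%Y}/month={t:%m}/day={t:%d}/hour={t:%H}/"
```

Sending one compressed batch instead of many small records reduces both request count and payload size, and date-based prefixes let offline jobs read only the partitions they need.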

#9 about 7 minutes

Q&A on model quality, scale, and player privacy

The team answers audience questions about event volume, ensuring model quality, load balancing, using AWS ML services, and handling player data privacy.

Related jobs

Jobs that call for the skills explored in this talk.

Picnic Technologies B.V.

Amsterdam, Netherlands

Senior

Java

Amazon Web Services (AWS)

+1

zeb consulting

Frankfurt am Main, Germany

Remote

Junior

Intermediate

Senior

Amazon Web Services (AWS)

Cloud Architecture

+1

Matching moments

02:20 MIN

The evolving role of the machine learning engineer

AI in the Open and in Browsers - Tarek Ziadé

04:17 MIN

Playing a game of real or fake tech headlines

WeAreDevelopers LIVE – You Don’t Need JavaScript, Modern CSS and More

14:06 MIN

Exploring the role and ethics of AI in gaming

Devs vs. Marketers, COBOL and Copilot, Make Live Coding Easy and more - The Best of LIVE 2025 - Part 3

05:03 MIN

Building and iterating on an LLM-powered product

Slopquatting, API Keys, Fun with Fonts, Recruiters vs AI and more - The Best of LIVE 2025 - Part 2

04:04 MIN

Shifting HR from standard products to AI-powered platforms

Turning People Strategy into a Transformation Engine

05:17 MIN

Shifting from traditional CVs to skill-based talent management

From Data Keeper to Culture Shaper: The Evolution of HR Across Growth Stages

04:57 MIN

Increasing the value of talk recordings post-event

Cat Herding with Lions and Tigers - Christian Heilmann

03:28 MIN

Why corporate AI adoption lags behind the hype

What 2025 Taught Us: A Year-End Special with Hung Lee

Featured Partners

Related Videos

25:48

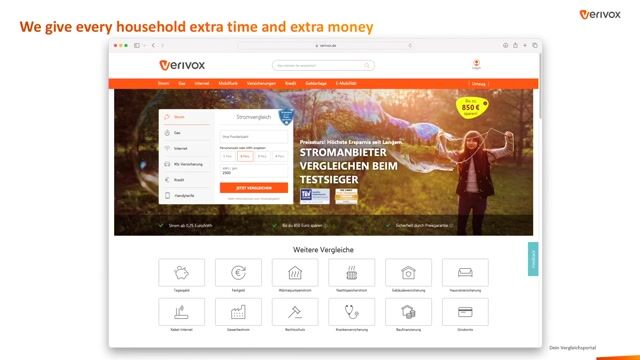

25:48The Road to MLOps: How Verivox Transitioned to AWS

Elisabeth Günther

29:26

29:26Empowering Thousands of Developers: Our Journey to an Internal Developer Platform

Bastian Heilemann & Bruno Margula

22:41

22:41Empowering Retail Through Applied Machine Learning

Christoph Fassbach & Daniel Rohr

30:07

30:07Lessons Learned Building a GenAI Powered App

Mete Atamel

22:24

22:24Reliable scalability: How Amazon.com scales on AWS

Florian Mair

48:54

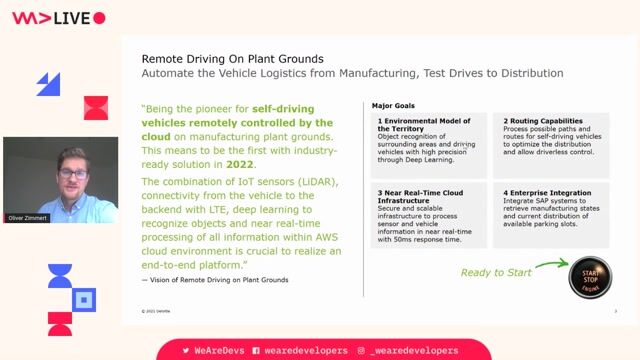

48:54Remote Driving on Plant Grounds with State-of-the-Art Cloud Technologies

Oliver Zimmert

24:22

24:22Database Magic behind 40 Million operations/s

Jürgen Pilz

28:12

28:12Scaling: from 0 to 20 million users

Josip Stuhli

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

Forschungszentrum Jülich GmbH

Jülich, Germany

Intermediate

Senior

Linux

Docker

AI Frameworks

Machine Learning

Almedia

Berlin, Germany

Intermediate

Senior

Python

PyTorch

PostgreSQL

TensorFlow

Scikit-learn

ROSEN Technology and Research Center GmbH

Osnabrück, Germany

Senior

React

DevOps

Next.js

TypeScript

Cloud (AWS/Google/Azure)

trivago

Düsseldorf, Germany

Senior

MySQL

Kafka

Python

Google BigQuery

Google Cloud Platform

Europa-Park GmbH & Co Mack KG

DevOps

Grafana

Terraform

Prometheus

Kubernetes

Amazon.com, Inc.

Municipality of Madrid, Spain

Machine Learning

Microsoft Office

Amazon Web Services (AWS)

GIOS Technology

Knutsford, United Kingdom

£117K

API

Kafka

DevOps

Gitlab

+10