Jan Curn

How to scrape modern websites to feed AI agents

#1 (about 1 minute)

Why web data is essential for training large language models

LLMs are trained on massive web datasets such as Common Crawl. Because that training data is a fixed snapshot, models have a knowledge cutoff and can hallucinate when asked about anything they have not seen.

#2 (about 2 minutes)

How RAG provides LLMs with up-to-date context

Retrieval-Augmented Generation (RAG), or context engineering, retrieves relevant external, live data at query time and passes it to the LLM as context, producing more accurate and timely answers.
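To make the retrieve-then-generate loop concrete, here is a minimal sketch in Python. It assumes plain term-frequency vectors and cosine similarity in place of a real embedding model and vector database, and it stops at building the augmented prompt; sending that prompt to an LLM is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Augmentation step: prepend the retrieved context to the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

In a real system, the documents would be the freshly scraped pages, which is exactly what lets the model answer questions beyond its training cutoff.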

#3 (about 3 minutes)

Navigating the complexities of modern web scraping

Modern websites use dynamic JavaScript rendering and anti-bot measures, requiring headless browsers, proxies, and CAPTCHA solvers to access data.
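A minimal sketch of one of these measures, proxy rotation with exponential backoff. The `fetch(url, proxy)` callable is a hypothetical placeholder for whatever actually performs the request (an HTTP client configured with that proxy, or a headless browser session). JavaScript rendering and CAPTCHA solving are out of scope here.

```python
import itertools
import time
from typing import Callable, Optional


class FetchError(Exception):
    """Raised by a fetcher when a request is blocked (e.g. HTTP 403, CAPTCHA page) or fails."""


def fetch_with_rotation(
    url: str,
    proxies: list[str],
    fetch: Callable[[str, str], str],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch(url, proxy) with the next proxy on each attempt, backing off exponentially."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    pool = itertools.cycle(proxies)  # round-robin over the proxy list
    last_error: Optional[Exception] = None
    for attempt in range(max_attempts):
        proxy = next(pool)
        try:
            return fetch(url, proxy)
        except FetchError as err:
            last_error = err
            if attempt < max_attempts - 1:
                sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ...
    raise FetchError(f"all {max_attempts} attempts failed for {url}") from last_error
```

Injecting `sleep` keeps the retry logic testable without real delays; switching proxies on each retry matters because anti-bot systems often block by IP address.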

#4 (about 2 minutes)

Cleaning messy HTML and scaling data extraction

To avoid the 'garbage in, garbage out' problem, you must clean the HTML by removing cookie banners, ads, and similar clutter. Scaling extraction to a whole site also means handling sitemaps and robots.txt.
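A minimal sketch of the cleaning step, using only Python's standard `html.parser`. The dropped tags and the class/id keywords (`cookie`, `consent`, `ad`, and so on) are illustrative assumptions rather than a complete rule set, and the depth counting assumes reasonably well-formed HTML; a production pipeline would typically work on a full DOM.

```python
import re
from html.parser import HTMLParser
from typing import Optional

# Tags whose contents are never useful page text.
DROP_TAGS = {"script", "style", "noscript", "iframe", "nav", "footer"}
# Assumed class/id keywords that usually mark cookie banners and ads.
NOISE_WORDS = {"cookie", "cookies", "consent", "ad", "ads", "advert", "sponsored"}
# Void elements never get an end tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class CleanTextExtractor(HTMLParser):
    """Collects visible text while skipping boilerplate subtrees."""

    def __init__(self) -> None:
        super().__init__(convert_charrefs=True)
        self.skip_depth = 0  # > 0 while inside a dropped subtree
        self.chunks: list[str] = []

    def _is_noise(self, tag: str, attrs: list[tuple[str, Optional[str]]]) -> bool:
        if tag in DROP_TAGS:
            return True
        for name, value in attrs:
            if name in ("class", "id") and value:
                # "cookie-banner" -> {"cookie", "banner"}; "header" stays {"header"}
                words = set(re.split(r"[\s_-]+", value.lower()))
                if words & NOISE_WORDS:
                    return True
        return False

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.skip_depth:
            self.skip_depth += 1  # nested element inside a dropped subtree
        elif self._is_noise(tag, attrs):
            self.skip_depth = 1

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self.skip_depth:
            self.chunks.append(text)


def clean_html(html: str) -> str:
    """Return the page's visible text, one text run per line."""
    parser = CleanTextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)
```

Matching whole class/id words rather than substrings avoids false positives such as treating `header` or `download` as ads.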

#5 (about 3 minutes)

Demo of scraping a website with Apify Actors

The demo uses the Apify Website Content Crawler to deep-crawl a website and extract its content as Markdown.
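The demo relies on Apify's crawler for this. The sketch below is not its implementation but a generic illustration of what a deep crawl involves: breadth-first traversal, a depth and page limit, staying on the start host, and honoring robots.txt via Python's standard `urllib.robotparser`. `fetch_links` is a hypothetical callable that returns the `href` values found on a page.

```python
from collections import deque
from typing import Callable
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.robotparser import RobotFileParser


def crawl(
    start_url: str,
    fetch_links: Callable[[str], list[str]],
    robots_txt: str,
    max_depth: int = 2,
    max_pages: int = 100,
    user_agent: str = "example-crawler",
) -> list[str]:
    """Breadth-first crawl limited to the start URL's host and the robots.txt rules."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([(start_url, 0)])
    visited: list[str] = []
    while queue and len(visited) < max_pages:
        url, depth = queue.popleft()
        if not robots.can_fetch(user_agent, url):
            continue  # disallowed by robots.txt
        visited.append(url)
        if depth == max_depth:
            continue  # record the page but do not follow its links
        for href in fetch_links(url):
            link, _ = urldefrag(urljoin(url, href))  # make absolute, drop #fragment
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return visited
```

Breadth-first order means that, when `max_pages` cuts the crawl short, the pages closest to the start URL are the ones kept.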

#6 (about 2 minutes)

Building a RAG chatbot with scraped data and Pinecone

The scraped website data is uploaded to a Pinecone vector database, enabling a chatbot to answer questions using the site's specific content.
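Before upload, scraped pages are usually split into chunks and embedded. The sketch below shows that preparation step under stated assumptions: fixed-size overlapping word windows, and a hypothetical `toy_embed` hashing function standing in for a real embedding model. Each record carries an `id`, a `values` vector, and `metadata` with the source URL and chunk text, so the chatbot can point back to where an answer came from.

```python
import hashlib
import math
import re


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of chunk_size words that overlap by `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    return [" ".join(words[i:i + chunk_size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def toy_embed(text: str, dims: int = 64) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * dims
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.sha256(token.encode()).hexdigest(), 16) % dims
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def to_records(url: str, text: str) -> list[dict]:
    """Build id/values/metadata records, one per chunk of the page."""
    page_id = hashlib.sha256(url.encode()).hexdigest()[:12]  # stable id prefix per URL
    return [
        {"id": f"{page_id}-{i}",
         "values": toy_embed(chunk),
         "metadata": {"url": url, "chunk": i, "text": chunk}}
        for i, chunk in enumerate(chunk_text(text))
    ]
```

With the Pinecone Python client, records in this shape are what an index's `upsert(vectors=...)` call accepts; check the client documentation for your version, and make sure the vector dimension matches the index.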

#7 (about 1 minute)

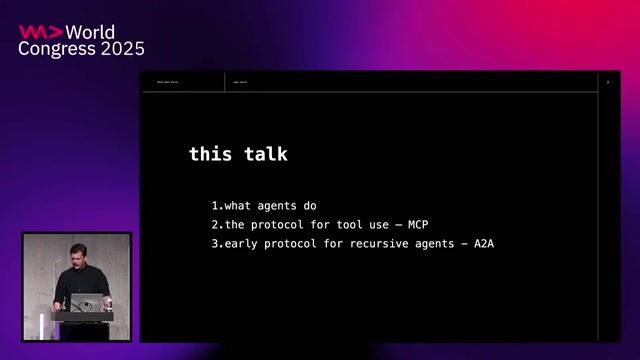

Using the Model Context Protocol for AI agent integration

The Model Context Protocol (MCP) gives AI agents a dynamic interface for discovering and calling tools at runtime, unlike traditional APIs, which are static and must be integrated in advance.
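MCP messages are JSON-RPC 2.0, and tool discovery and invocation go through the `tools/list` and `tools/call` methods. The following is a deliberately simplified, in-process sketch of the server side: it skips the `initialize` handshake, capability negotiation, and the transport (stdio or HTTP), and `get_page_title` is a hypothetical tool.

```python
import json
from typing import Any


def get_page_title(arguments: dict[str, Any]) -> str:
    """Hypothetical tool body; a real server might run a scraper here."""
    return f"Title of {arguments['url']}"


# Tool registry: MCP-style metadata (description, JSON Schema input) plus a handler.
TOOLS: dict[str, dict[str, Any]] = {
    "get_page_title": {
        "description": "Return the title of a web page.",
        "inputSchema": {
            "type": "object",
            "properties": {"url": {"type": "string"}},
            "required": ["url"],
        },
        "handler": get_page_title,
    },
}


def rpc_result(req_id: Any, result: dict[str, Any]) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


def rpc_error(req_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle_request(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request for the tools/list or tools/call method."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        tools = [{"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                 for name, t in TOOLS.items()]
        return rpc_result(req_id, {"tools": tools})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return rpc_error(req_id, -32602, f"Unknown tool: {params.get('name')}")
        text = tool["handler"](params.get("arguments", {}))
        return rpc_result(req_id, {"content": [{"type": "text", "text": text}], "isError": False})
    return rpc_error(req_id, -32601, f"Method not found: {method}")
```

Because the client asks the server for its tool list at runtime instead of compiling against fixed endpoints, the server can change what it offers without the agent being rebuilt.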

#8 (about 3 minutes)

Demo of dynamic tool discovery using MCP

An AI agent uses MCP to dynamically search the Apify store for a Twitter scraper, add it to its context, and then use it to fetch live data.
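The discover, add, and use loop from the demo can be sketched as follows. Everything here is hypothetical (`DynamicToolServer`, the catalog entries, `search_catalog`, `add_tool`) and is not Apify's MCP server. The one piece taken from MCP itself is the `notifications/tools/list_changed` notification, which a server sends so the client re-fetches `tools/list`.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Tool:
    name: str
    description: str
    run: Callable[[dict[str, Any]], Any]


@dataclass
class DynamicToolServer:
    """Hypothetical server that starts with no loaded tools and activates them on demand."""
    catalog: dict[str, Tool]
    active: dict[str, Tool] = field(default_factory=dict)
    notifications: list[dict[str, str]] = field(default_factory=list)

    def search_catalog(self, query: str) -> list[str]:
        """Return catalog tool names whose name or description contains every query word."""
        words = query.lower().split()
        return [name for name, tool in self.catalog.items()
                if all(w in f"{name} {tool.description}".lower() for w in words)]

    def add_tool(self, name: str) -> None:
        """Activate a catalog tool and queue MCP's list_changed notification for the client."""
        self.active[name] = self.catalog[name]
        self.notifications.append({"jsonrpc": "2.0", "method": "notifications/tools/list_changed"})

    def list_tools(self) -> list[str]:
        return sorted(self.active)

    def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        if name not in self.active:
            raise KeyError(f"tool {name!r} is not loaded")
        return self.active[name].run(arguments)
```

Keeping only the tools an agent actually needs in the active set keeps its context small, which is the point of discovering tools on demand rather than loading an entire store up front.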

Related jobs

Jobs that call for the skills explored in this talk.

Wilken GmbH

Ulm, Germany

Senior

Kubernetes

AI Frameworks

+3

Picnic Technologies B.V.

Amsterdam, Netherlands

Intermediate

Senior

Python

Structured Query Language (SQL)

+1

Matching moments

06:33 MIN

The security challenges of building AI browser agents

AI in the Open and in Browsers - Tarek Ziadé

06:28 MIN

Using AI agents to modernize legacy COBOL systems

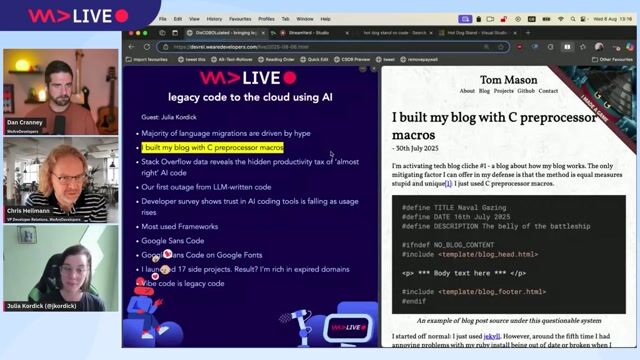

Devs vs. Marketers, COBOL and Copilot, Make Live Coding Easy and more - The Best of LIVE 2025 - Part 3

06:44 MIN

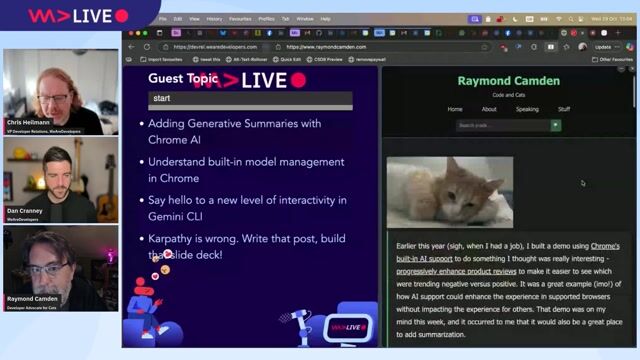

Using Chrome's built-in AI for on-device features

Devs vs. Marketers, COBOL and Copilot, Make Live Coding Easy and more - The Best of LIVE 2025 - Part 3

04:06 MIN

Using AI to enable human connection in recruiting

Retention Over Attraction: A New Employer Branding Mindset

04:57 MIN

Increasing the value of talk recordings post-event

Cat Herding with Lions and Tigers - Christian Heilmann

08:29 MIN

How AI threatens the open source documentation business model

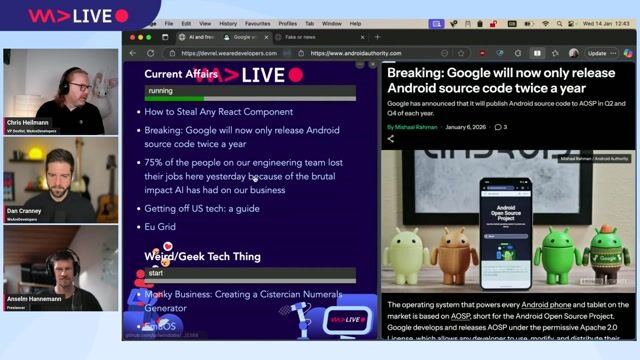

WeAreDevelopers LIVE – AI, Freelancing, Keeping Up with Tech and More

09:10 MIN

How AI is changing the freelance developer experience

WeAreDevelopers LIVE – AI, Freelancing, Keeping Up with Tech and More

14:06 MIN

Exploring the role and ethics of AI in gaming

Devs vs. Marketers, COBOL and Copilot, Make Live Coding Easy and more - The Best of LIVE 2025 - Part 3

Featured Partners

Related Videos

26:15

26:15Scrape, Train, Predict: The Lifecycle of Data for AI Applications

Vidas Bacevičius

1:06:14

1:06:14WeAreDevelopers LIVE – Web Scraping, Agents, Actors and more

Chris Heilmann, Daniel Cranney, Ondra Urban & COO & GTM at Apify

23:17

23:17MCP Mashups: How AI Agents are Reviving the Programmable Web

Angie Jones

25:32

25:32Beyond Prompting: Building Scalable AI with Multi-Agent Systems and MCP

Viktoria Semaan

21:17

21:17Carl Lapierre - Exploring Advanced Patterns in Retrieval-Augmented Generation

Carl Lapierre

28:04

28:04Build RAG from Scratch

Phil Nash

35:16

35:16How AI Models Get Smarter

Ankit Patel

24:12

24:12From A2A to MCP: How AI’s “Brains” are Connecting to “Arms and Legs”

Brad Axen

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

Forschungszentrum Jülich GmbH

Jülich, Germany

Intermediate

Senior

Linux

Docker

AI Frameworks

Machine Learning

The Rolewe

Charing Cross, United Kingdom

API

Python

Machine Learning

autonomous-teaming

München, Germany

Remote

API

React

Python

TypeScript

Amazon.com Inc.

XML

HTML

JSON

Python

Data analysis

+1