David vonThenen

Confuse, Obfuscate, Disrupt: Using Adversarial Techniques for Better AI and True Anonymity

#1 (about 1 minute)

The importance of explainable AI and data quality

AI models are only as good as their training data, which is often plagued by bias, noise, and inaccuracies that explainable AI helps to uncover.

#2 (about 3 minutes)

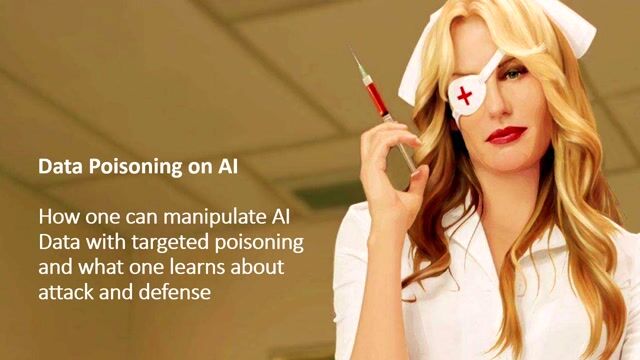

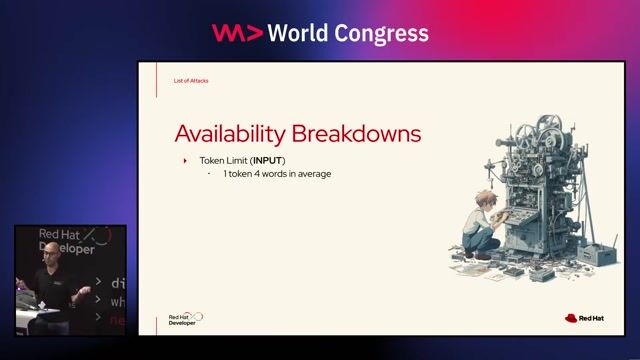

Identifying common data inconsistencies in AI models

Models can be compromised by issues like annotation errors, data imbalance, and adversarial samples, whose effects on predictions can be investigated with attribution tools like Captum.
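Of these issues, data imbalance is the easiest to check before any model is trained. The sketch below is minimal and assumes the labels are available as a plain Python list. The helper names are hypothetical and come neither from the talk nor from Captum.

```python
from collections import Counter

def label_distribution(labels):
    """Return each label's share of the dataset, most common label first."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.most_common()}

def imbalance_ratio(labels):
    """Most common label count divided by least common one (1.0 = balanced)."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())

# Hypothetical sentiment labels: 8 positive vs. 2 negative examples.
labels = ["positive"] * 8 + ["negative"] * 2
print(label_distribution(labels))  # {'positive': 0.8, 'negative': 0.2}
print(imbalance_ratio(labels))     # 4.0
```

A ratio well above 1.0 suggests the model may learn to favor the majority class. Attribution tools can then help confirm whether that bias actually shows up in its predictions.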

#3 (about 2 minutes)

The dual purpose of adversarial AI attacks

Intentionally introducing adversarial inputs serves two purposes: testing a model's boundaries to make it more robust, or disrupting a model to obfuscate data and protect personal privacy.

#4 (about 3 minutes)

How to confuse NLP models with creative inputs

Natural language processing models can be disrupted using techniques like encoding, code-switching, misspellings, and even metaphors to prevent accurate interpretation.
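Three of these perturbations can be sketched in a few lines: Base64 encoding, swapping adjacent letters to create a misspelling, and replacing letters with look-alike digits. The function names and the digit mapping are hypothetical illustrations, not the talk's code. Code-switching and metaphors depend on language knowledge and are not covered here.

```python
import base64
import random

def encode_base64(text):
    """Hide the text behind Base64 so a model sees an opaque token string."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")

def swap_adjacent_chars(word, rng):
    """Introduce a plausible typo by swapping two neighboring characters."""
    if len(word) < 4:
        return word  # too short to perturb without becoming unreadable
    i = rng.randrange(1, len(word) - 2)  # keep first and last letters in place
    chars = list(word)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)

def misspell_sentence(sentence, seed=0):
    """Apply one adjacent-letter swap to every word long enough to take it."""
    rng = random.Random(seed)
    return " ".join(swap_adjacent_chars(w, rng) for w in sentence.split())

# Hypothetical look-alike mapping; humans still read it, tokenizers often don't.
LEET = str.maketrans({"a": "4", "e": "3", "i": "1", "o": "0", "s": "5"})

def leetspeak(text):
    """Replace selected letters with visually similar digits."""
    return text.translate(LEET)

print(encode_base64("I love this movie"))
print(misspell_sentence("this movie is great", seed=1))
print(leetspeak("this movie is great"))  # th15 m0v13 15 gr34t
```

Each transformation keeps the text recoverable for a human reader while changing the tokens a model receives.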

#5 (about 4 minutes)

Visualizing model predictions with the Captum library

The Captum library for PyTorch helps visualize which parts of an input, like words in a sentence or pixels in an image, contribute most to a model's final prediction.
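Captum offers several attribution methods. One of them is Integrated Gradients (`IntegratedGradients` in `captum.attr`). The sketch below does not call Captum. Instead, it implements the Integrated Gradients idea in plain Python for a tiny logistic model, so the mechanics are visible. The weights and inputs are made up for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def model(x, w, b):
    """Toy classifier: probability of the positive class for feature vector x."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def gradient(x, w, b):
    """Analytic gradient of the model output with respect to each input feature."""
    p = model(x, w, b)
    return [p * (1.0 - p) * wi for wi in w]

def integrated_gradients(x, baseline, w, b, steps=200):
    """Approximate Integrated Gradients with a midpoint Riemann sum along
    the straight path from the baseline to the input."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [bi + alpha * (xi - bi) for xi, bi in zip(x, baseline)]
        for i, g in enumerate(gradient(point, w, b)):
            total[i] += g
    return [(xi - bi) * t / steps for xi, bi, t in zip(x, baseline, total)]

# Hypothetical example: three input features, e.g. scores for three words.
w = [2.0, -1.0, 0.1]
b = 0.0
x = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(x, baseline, w, b)
print([round(a, 3) for a in attributions])
# Completeness check: attributions should sum to model(x) - model(baseline).
print(round(sum(attributions), 4), round(model(x, w, b) - model(baseline, w, b), 4))
```

The first feature gets the largest positive attribution and the second a negative one. This is the "which parts of the input drove the prediction" signal that Captum visualizes for words or pixels.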

#6 (about 6 minutes)

Manipulating model outputs with subtle input changes

Simple misspellings can flip a sentiment analysis result from positive to negative, and changing a single pixel can cause an image classifier to misidentify a cat as a dog.
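The misspelling effect can be reproduced with a deliberately simple, hypothetical word-lexicon classifier. This is not the model from the talk, and real models with subword tokenizers behave less crudely. It does show the basic mechanism: a misspelled word falls out of the vocabulary, so its signal disappears.

```python
# Hypothetical word-level sentiment lexicon; real models use learned embeddings.
LEXICON = {"great": 2.0, "love": 2.0, "good": 1.0, "boring": -1.0, "bad": -2.0}

def sentiment(text):
    """Sum lexicon scores; out-of-vocabulary words contribute nothing.
    A score of zero or below is treated as negative."""
    score = sum(LEXICON.get(word, 0.0) for word in text.lower().split())
    return ("positive" if score > 0 else "negative"), score

original = "great acting but a boring ending"
attacked = "graet acting but a boring ending"  # one transposed letter

print(sentiment(original))  # ('positive', 1.0)
print(sentiment(attacked))  # ('negative', -1.0)
```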

#7 (about 2 minutes)

Using an adversarial pattern t-shirt to evade detection

A t-shirt printed with a specific adversarial pattern can disrupt a real-time person detection model, effectively making the wearer invisible to the AI system.

#8 (about 2 minutes)

Techniques for defending models against adversarial attacks

Defenses against NLP attacks include text normalization and grammar checks, while vision attacks can be mitigated with image blurring, bit-depth reduction, or more advanced approaches such as adversarial training on examples generated with FGSM (Fast Gradient Sign Method).
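Two of the simpler defenses are easy to sketch: normalizing text before it reaches an NLP model, and reducing the bit depth of pixel values before they reach a vision model. The digit-to-letter mapping and the function names are hypothetical. `unicodedata.normalize` with `"NFKC"` is the standard-library way to fold look-alike Unicode forms such as full-width letters.

```python
import unicodedata

# Hypothetical mapping that undoes common look-alike digit substitutions.
DELEET = str.maketrans({"4": "a", "3": "e", "1": "i", "0": "o", "5": "s"})

def normalize_text(text):
    """Fold Unicode look-alikes (e.g. full-width letters) with NFKC, lowercase,
    and map look-alike digits back to letters."""
    text = unicodedata.normalize("NFKC", text).lower()
    return text.translate(DELEET)

def reduce_bit_depth(pixels, bits):
    """Quantize 8-bit pixel values (0-255) to `bits` bits per channel and map
    them back to 0-255; perturbations smaller than one step disappear."""
    levels = 2 ** bits - 1
    return [[round(p / 255 * levels) * 255 // levels for p in row] for row in pixels]

print(normalize_text("Th15 m0v13 15 ＧＲＥＡＴ"))        # this movie is great
print(reduce_bit_depth([[120, 121, 250]], bits=4))  # [[119, 119, 255]]
```

Both defenses trade some information for robustness. The digit mapping also rewrites legitimate numbers, and coarse quantization discards fine image detail, so a real pipeline would tune or restrict them.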

#9 (about 2 minutes)

Defeating a single-pixel attack with image blurring

Applying a simple Gaussian blur to an image containing an adversarial pixel smooths out the manipulation, allowing the model to correctly classify the image.
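The smoothing effect can be shown on a tiny grayscale patch with a 3x3 Gaussian kernel. In practice you would likely use a library routine such as OpenCV's `cv2.GaussianBlur` or torchvision's `GaussianBlur` transform. The plain-Python version below only makes the arithmetic visible, and the patch values are hypothetical.

```python
# 3x3 Gaussian kernel (binomial approximation); weights sum to 16.
KERNEL = [[1, 2, 1],
          [2, 4, 2],
          [1, 2, 1]]

def gaussian_blur(image):
    """Blur a 2D grayscale image (list of lists) with a 3x3 Gaussian kernel,
    clamping coordinates at the borders (edge-replicate padding)."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += KERNEL[dy + 1][dx + 1] * image[yy][xx]
            out[y][x] = acc / 16
    return out

# Hypothetical 5x5 patch of uniform gray (100) with one adversarial pixel at 255.
patch = [[100] * 5 for _ in range(5)]
patch[2][2] = 255
blurred = gaussian_blur(patch)
print(blurred[2][2])  # 138.75
print(blurred[2][3])  # 119.375
```

The spike's deviation from the background drops from 155 to 38.75 and is spread over its neighbors. This is why a small blur can neutralize a single-pixel attack, at the cost of slightly softening the whole image.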
