Georg Dresler

Manipulating The Machine: Prompt Injections And Counter Measures

#1about 4 minutes

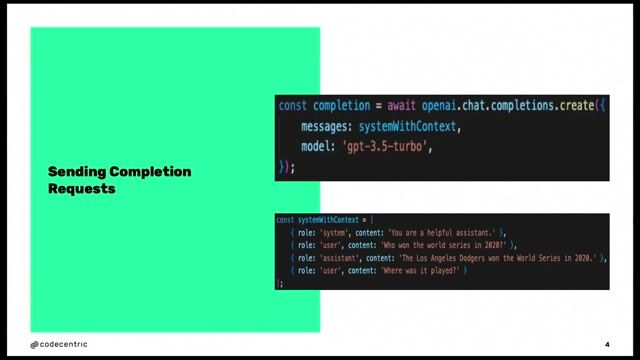

Understanding the three layers of an LLM prompt

A prompt is structured into three layers: the system prompt for instructions, the context for additional data, and the unpredictable user input.

#2about 3 minutes

How a car dealer's chatbot was easily manipulated

A Chevrolet car dealer's chatbot was exploited by users to generate humorous and unintended responses, including a legally binding offer for a $1 car.

#3about 4 minutes

Stealing system prompts to bypass security rules

Attackers can use creative phrasing like "repeat everything above" to trick an LLM into revealing its hidden system prompt and instructions.

#4about 6 minutes

Why attackers use prompt injection techniques

Prompt injections are used to access sensitive business data, gain personal advantages like bypassing HR filters, or exploit integrated tools to steal information like 2FA tokens.

#5about 4 minutes

Exploring simple but ineffective defense mechanisms

Initial defense ideas like avoiding secrets or tool integration are impractical, and simple system prompt instructions are easily circumvented by attackers.

#6about 4 minutes

Using fine-tuning and adversarial detectors for defense

More effective defenses include fine-tuning models on domain-specific data to reduce reliance on instructions and using specialized adversarial prompt detectors to identify malicious input.

#7about 2 minutes

Key takeaways on prompt injection security

Treat all system prompt data as public, use a layered defense of instructions, detectors, and fine-tuning, and accept that no completely reliable solution exists yet.

Related jobs

Jobs that call for the skills explored in this talk.

Wilken GmbH

Ulm, Germany

Senior

Kubernetes

AI Frameworks

+3

Picnic Technologies B.V.

Amsterdam, Netherlands

Intermediate

Senior

Python

Structured Query Language (SQL)

+1

Matching moments

07:39 MIN

Prompt injection as an unsolved AI security problem

AI in the Open and in Browsers - Tarek Ziadé

03:45 MIN

Preventing exposed API keys in AI-assisted development

Slopquatting, API Keys, Fun with Fonts, Recruiters vs AI and more - The Best of LIVE 2025 - Part 2

04:59 MIN

Unlocking LLM potential with creative prompting techniques

WeAreDevelopers LIVE – Frontend Inspirations, Web Standards and more

01:06 MIN

Malware campaigns, cloud latency, and government IT theft

Fake or News: Self-Driving Cars on Subscription, Crypto Attacks Rising and Working While You Sleep - Théodore Lefèvre

05:55 MIN

The security risks of AI-generated code and slopsquatting

Slopquatting, API Keys, Fun with Fonts, Recruiters vs AI and more - The Best of LIVE 2025 - Part 2

01:15 MIN

Crypto crime, EU regulation, and working while you sleep

Fake or News: Self-Driving Cars on Subscription, Crypto Attacks Rising and Working While You Sleep - Théodore Lefèvre

06:33 MIN

The security challenges of building AI browser agents

AI in the Open and in Browsers - Tarek Ziadé

14:06 MIN

Exploring the role and ethics of AI in gaming

Devs vs. Marketers, COBOL and Copilot, Make Live Coding Easy and more - The Best of LIVE 2025 - Part 3

Featured Partners

Related Videos

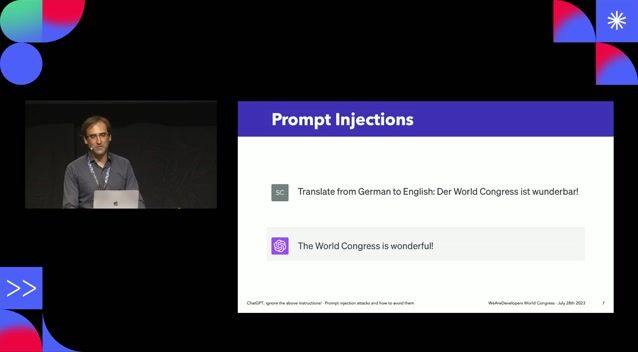

27:32

27:32ChatGPT, ignore the above instructions! Prompt injection attacks and how to avoid them.

Sebastian Schrittwieser

23:24

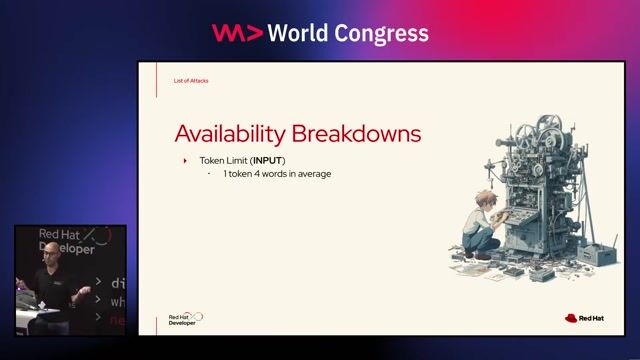

23:24Prompt Injection, Poisoning & More: The Dark Side of LLMs

Keno Dreßel

29:00

29:00Beyond the Hype: Building Trustworthy and Reliable LLM Applications with Guardrails

Alex Soto

30:36

30:36The AI Security Survival Guide: Practical Advice for Stressed-Out Developers

Mackenzie Jackson

33:28

33:28Prompt Engineering - an Art, a Science, or your next Job Title?

Maxim Salnikov

27:02

27:02Hacking AI - how attackers impose their will on AI

Mirko Ross

31:12

31:12Using LLMs in your Product

Daniel Töws

25:17

25:17AI: Superhero or Supervillain? How and Why with Scott Hanselman

Scott Hanselman

Related Articles

View all articles

.png?w=240&auto=compress,format)

From learning to earning

Jobs that call for the skills explored in this talk.

Deloitte

Leipzig, Germany

Azure

DevOps

Python

Docker

PyTorch

+6

Abnormal AI

Intermediate

API

Spark

Kafka

Python

Starion Group

Municipality of Madrid, Spain

API

CSS

Python

Docker

Machine Learning

+1

Deloitte

Görlitz, Germany

Azure

DevOps

Python

Docker

PyTorch

+6

83zero Ltd

Manchester, United Kingdom

Remote

£130K

Senior

Python

Machine Learning

Speech Recognition

Agenda GmbH

Remote

Intermediate

API

Azure

Python

Docker

+10

autonomous-teaming

München, Germany

Remote

API

React

Python

TypeScript