Sebastian Schrittwieser

ChatGPT, ignore the above instructions! Prompt injection attacks and how to avoid them.

#1about 2 minutes

The rapid adoption of LLMs outpaces security practices

New technologies like large language models are often adopted quickly without established security best practices, creating new vulnerabilities.

#2about 4 minutes

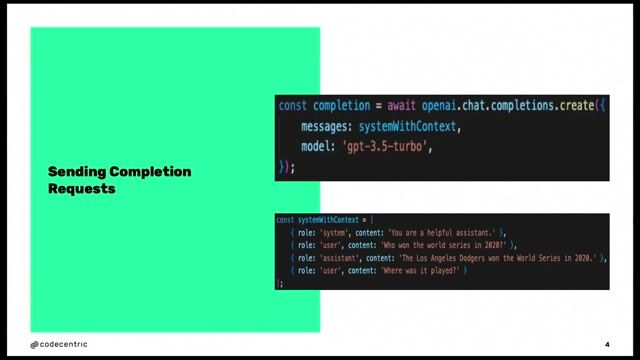

How user input can override developer instructions

A prompt injection occurs when untrusted user input contains instructions that hijack the LLM's behavior, overriding the developer's original intent defined in the context.

#3about 4 minutes

Using prompt injection to steal confidential context data

Attackers can use prompt injection to trick an LLM into revealing its confidential context or system prompt, exposing proprietary logic or sensitive information.

#4about 4 minutes

Expanding the attack surface with plugins and web data

LLM plugins that access external data like emails or websites create an indirect attack vector where malicious prompts can be hidden in that external content.

#5about 2 minutes

Prompt injection as the new SQL injection for LLMs

Prompt injection mirrors traditional SQL injection by mixing untrusted data with developer instructions, but lacks a clear mitigation like prepared statements.

#6about 3 minutes

Why simple filtering and encoding fail to stop attacks

Common security tactics like input filtering and blacklisting are ineffective against prompt injections due to the flexibility of natural language and encoding bypass techniques.

#7about 4 minutes

Using user confirmation and dual LLM models for defense

Advanced strategies include requiring user confirmation for sensitive actions or using a dual LLM architecture to isolate privileged operations from untrusted data processing.

#8about 5 minutes

The current state of LLM security and the need for awareness

There is currently no perfect solution for prompt injection, making developer awareness and careful design of LLM interactions the most critical defense.

Related jobs

Jobs that call for the skills explored in this talk.

Technoly GmbH

Berlin, Germany

€50-60K

Intermediate

Network Security

Security Architecture

+2

Wilken GmbH

Ulm, Germany

Senior

Kubernetes

AI Frameworks

+3

Picnic Technologies B.V.

Amsterdam, Netherlands

Intermediate

Senior

Python

Structured Query Language (SQL)

+1

Matching moments

07:39 MIN

Prompt injection as an unsolved AI security problem

AI in the Open and in Browsers - Tarek Ziadé

03:45 MIN

Preventing exposed API keys in AI-assisted development

Slopquatting, API Keys, Fun with Fonts, Recruiters vs AI and more - The Best of LIVE 2025 - Part 2

04:59 MIN

Unlocking LLM potential with creative prompting techniques

WeAreDevelopers LIVE – Frontend Inspirations, Web Standards and more

05:55 MIN

The security risks of AI-generated code and slopsquatting

Slopquatting, API Keys, Fun with Fonts, Recruiters vs AI and more - The Best of LIVE 2025 - Part 2

01:06 MIN

Malware campaigns, cloud latency, and government IT theft

Fake or News: Self-Driving Cars on Subscription, Crypto Attacks Rising and Working While You Sleep - Théodore Lefèvre

06:33 MIN

The security challenges of building AI browser agents

AI in the Open and in Browsers - Tarek Ziadé

01:15 MIN

Crypto crime, EU regulation, and working while you sleep

Fake or News: Self-Driving Cars on Subscription, Crypto Attacks Rising and Working While You Sleep - Théodore Lefèvre

06:46 MIN

How AI-generated content is overwhelming open source maintainers

WeAreDevelopers LIVE – You Don’t Need JavaScript, Modern CSS and More

Featured Partners

Related Videos

27:10

27:10Manipulating The Machine: Prompt Injections And Counter Measures

Georg Dresler

23:24

23:24Prompt Injection, Poisoning & More: The Dark Side of LLMs

Keno Dreßel

29:00

29:00Beyond the Hype: Building Trustworthy and Reliable LLM Applications with Guardrails

Alex Soto

30:36

30:36The AI Security Survival Guide: Practical Advice for Stressed-Out Developers

Mackenzie Jackson

35:37

35:37Can Machines Dream of Secure Code? Emerging AI Security Risks in LLM-driven Developer Tools

Liran Tal

31:12

31:12Using LLMs in your Product

Daniel Töws

21:01

21:01Let’s write an exploit using AI

Julian Totzek-Hallhuber

33:28

33:28Prompt Engineering - an Art, a Science, or your next Job Title?

Maxim Salnikov

Related Articles

View all articles

.png?w=240&auto=compress,format)

From learning to earning

Jobs that call for the skills explored in this talk.

Siemens AG

München, Germany

API

GIT

Ruby

Docker

Ansible

+4

Abnormal AI

Intermediate

API

Spark

Kafka

Python

Starion Group

Municipality of Madrid, Spain

API

CSS

Python

Docker

Machine Learning

+1

Mindrift

Remote

£41K

Junior

JSON

Python

Data analysis

+1

Snyk's Incubation Accelerator

Charing Cross, United Kingdom

Go

Python

Node.js

Microservices

Agile Methodologies

+1

Recorded Future's Insikt Group

Remote

Senior

Bash

Perl

Linux

Python

+2

Deloitte

Leipzig, Germany

Azure

DevOps

Python

Docker

PyTorch

+6

Prognum Automotive GmbH

Ulm, Germany

Remote

C++