Keno Dreßel

Prompt Injection, Poisoning & More: The Dark Side of LLMs

#1 about 5 minutes

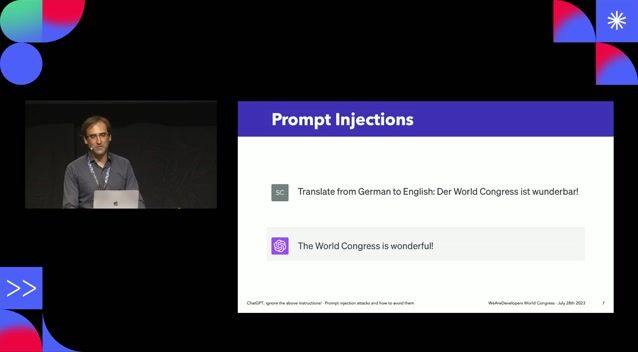

Understanding and mitigating prompt injection attacks

Prompt injection manipulates an LLM's output either directly, through the prompt itself, or indirectly, through content the model processes. Mitigations include restricting what the model is allowed to do and applying guardrails.
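The two mitigations named here can be sketched roughly as follows. This is a minimal illustration, not the speaker's implementation: the tool names in `ALLOWED_TOOLS` and the phrases in `SUSPICIOUS_PATTERNS` are hypothetical, and pattern matching on its own is easy to bypass, so the capability allow-list is the stronger of the two controls.

```python
import re

# Hypothetical allow-list: the only actions this assistant may ever trigger.
ALLOWED_TOOLS = {"search_docs", "get_weather"}

# Hypothetical phrases typical of injection attempts (illustrative, not exhaustive).
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all|the) (previous|above) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
]


def looks_like_injection(text: str) -> bool:
    """Input guardrail: flag text that matches a known injection phrase."""
    return any(pattern.search(text) for pattern in SUSPICIOUS_PATTERNS)


def authorize_tool_call(tool_name: str) -> bool:
    """Capability restriction: reject any tool the model requests outside the allow-list."""
    return tool_name in ALLOWED_TOOLS
```

The allow-list check runs on every tool call the model requests, regardless of whether the input guardrail flagged anything, so a successful injection still cannot reach capabilities the application never exposed.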

#2 about 6 minutes

Protecting against data and model poisoning risks

Malicious or biased training data can poison a model's worldview, so training data needs careful screening and models need to be kept up to date.
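One simple form of data screening is to accept training records only from vetted sources and to drop exact duplicates, which limits how much weight a single repeated document can gain. The sketch below assumes a hypothetical `Record` type and a hypothetical `TRUSTED_SOURCES` set; real pipelines usually add content-level checks on top.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    source: str  # where the text came from (hypothetical field)
    text: str


# Hypothetical allow-list of vetted data sources.
TRUSTED_SOURCES = {"internal-wiki", "curated-docs"}


def screen(records: list[Record]) -> list[Record]:
    """Keep records from trusted sources, dropping case-insensitive duplicates."""
    seen: set[str] = set()
    kept: list[Record] = []
    for record in records:
        if record.source not in TRUSTED_SOURCES:
            continue  # unknown origin: do not train on it
        key = record.text.strip().lower()
        if key in seen:
            continue  # repeated text would be over-weighted in training
        seen.add(key)
        kept.append(record)
    return kept
```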

#3 about 6 minutes

Securing downstream systems from insecure model outputs

LLM outputs can exploit downstream systems like databases or frontends, so they must be treated as untrusted user input and sanitized accordingly.
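Treating model output as untrusted input means applying the same defenses used for user-supplied data. The sketch below shows two of them with the Python standard library: a parameterized SQL query and HTML escaping. The `users` table and the function names are assumptions made for illustration.

```python
import html
import sqlite3


def find_user(conn: sqlite3.Connection, llm_supplied_name: str) -> list[tuple]:
    """Look up a user by a name produced by the model.

    The value is bound as a parameter, so it is never parsed as SQL.
    """
    cursor = conn.execute(
        "SELECT id, name FROM users WHERE name = ?",
        (llm_supplied_name,),
    )
    return cursor.fetchall()


def render_reply(llm_text: str) -> str:
    """Escape model output before placing it into an HTML page."""
    return "<p>" + html.escape(llm_text) + "</p>"
```

With this approach a model reply containing `<script>` tags is shown as text rather than executed, and a reply shaped like a SQL injection payload is matched literally as a name.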

#4 about 4 minutes

Preventing sensitive information disclosure via LLMs

Sensitive data used for training can be extracted from models, highlighting the need to redact or anonymize information before it reaches the LLM.
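Redaction before text reaches the model could look like the following minimal sketch. The two regular expressions are deliberately simple assumptions that catch common email and phone formats only; production redaction typically relies on dedicated PII detection rather than a pair of patterns.

```python
import re

# Simplified, illustrative patterns; they will miss many real-world formats.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s-]{7,}\d")


def redact(text: str) -> str:
    """Replace emails and phone-like numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text
```

Running `redact` on data before it is used for training or sent in a prompt means the model never sees the original values, so they cannot be extracted from it later.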

#5 about 1 minute

Why comprehensive security is non-negotiable for LLMs

Just like in traditional application security, achieving 99% security is still a failing grade because attackers will find and exploit any existing vulnerability.
