Jodie Burchell

A beginner’s guide to modern natural language processing

#1 (about 5 minutes)

Understanding the core challenge of natural language processing

Machine learning models require numerical inputs, so raw text must first be converted into numerical vectors, often called text embeddings.

#2 (about 6 minutes)

Exploring bag-of-words methods for text vectorization

Binary and count vectorization create features based on the presence or frequency of words in a document, ignoring their original context.
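
A minimal pure-Python sketch of both bag-of-words variants follows. It assumes whitespace tokenization and lowercasing, and the three headlines are made up for illustration. Libraries such as scikit-learn provide production versions of the same idea.

```python
from collections import Counter


def build_vocabulary(documents):
    """Return a sorted list of every lowercase token seen in the corpus."""
    return sorted({token for doc in documents for token in doc.lower().split()})


def count_vectorize(document, vocabulary):
    """Count how often each vocabulary word occurs in the document."""
    counts = Counter(document.lower().split())
    return [counts[word] for word in vocabulary]


def binary_vectorize(document, vocabulary):
    """Mark each vocabulary word as present (1) or absent (0)."""
    return [1 if count > 0 else 0 for count in count_vectorize(document, vocabulary)]


# Hypothetical headlines, for illustration only.
headlines = [
    "you will not believe this trick",
    "parliament passes new budget",
    "this trick will change your life this year",
]
vocab = build_vocabulary(headlines)
print(vocab)
print(count_vectorize(headlines[2], vocab))
print(binary_vectorize(headlines[2], vocab))
```

Because only presence or frequency is recorded, "this trick" and "trick this" map to the same vector. This is the loss of context the section describes.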

#3 (about 4 minutes)

How Word2Vec captures word meaning in vector space

The Word2Vec model learns numerical representations for words by analyzing their surrounding context, so that words appearing in similar contexts end up close together in a multi-dimensional vector space.
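
Word2Vec comes in two architectures, CBOW and skip-gram. The sketch below shows only the data side of skip-gram: it turns a sentence into (target, context) training pairs within a fixed window. It also includes cosine similarity, the usual measure of how "close" two word vectors are. The window size and the function names are illustrative assumptions, and the neural network training itself is not shown.

```python
import math


def skipgram_pairs(tokens, window=2):
    """Return (target, context) pairs for every word within `window` positions."""
    pairs = []
    for i, target in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((target, tokens[j]))
    return pairs


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


print(skipgram_pairs(["the", "cat", "sat"], window=1))
```

During training, the model adjusts each word's vector so it becomes good at predicting its context words. Words that share contexts therefore get vectors with high cosine similarity.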

#4 (about 5 minutes)

Training a Word2Vec model in Python using Gensim

A practical demonstration shows how to clean text data and train a custom Word2Vec model to generate embeddings for a specific vocabulary.
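
The talk's exact cleaning steps are not listed here. The sketch below therefore assumes a simple pipeline (lowercase, drop apostrophes, replace other punctuation with spaces, split on whitespace) and then trains a model with Gensim 4's `Word2Vec` class. The hyperparameter values are illustrative defaults, not the ones used in the talk. Gensim is imported inside the training function so the cleaning step can be tried without it.

```python
import re


def clean_headline(text):
    """Lowercase, drop apostrophes, replace other punctuation with spaces, tokenize."""
    text = re.sub(r"['’]", "", text.lower())
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    return text.split()


def train_word2vec(headlines, vector_size=100, window=5, min_count=1):
    """Train a Word2Vec model on cleaned headlines (requires gensim >= 4)."""
    # Imported here so clean_headline() works even if gensim is not installed.
    from gensim.models import Word2Vec

    sentences = [clean_headline(h) for h in headlines]
    return Word2Vec(
        sentences=sentences,
        vector_size=vector_size,  # dimensionality of each word embedding
        window=window,            # context words considered on each side
        min_count=min_count,      # ignore words rarer than this
        workers=1,                # single worker thread
        seed=42,
    )


print(clean_headline("You WON'T believe #7!"))
```

After training, `model.wv["trick"]` returns the learned vector for a word and `model.wv.most_similar("trick")` lists its nearest neighbours, provided the word survived the `min_count` filter.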

#5 (about 3 minutes)

Creating document embeddings by averaging word vectors

A simple yet effective method to represent an entire document is to retrieve the embedding for each word and calculate their average vector.
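
A minimal sketch of the averaging step, assuming a plain dictionary of word vectors. A trained Gensim model's `model.wv` supports the same `in` and `[]` lookups. Words without a vector are skipped, and a zero vector is returned when no word is known; that fallback is one common choice, not necessarily the talk's.

```python
def average_embedding(tokens, word_vectors, dim):
    """Average the vectors of known tokens; unknown tokens are skipped."""
    known = [word_vectors[t] for t in tokens if t in word_vectors]
    if not known:
        return [0.0] * dim  # no known words: fall back to a zero vector
    # zip(*known) groups the i-th component of every vector together.
    return [sum(values) / len(known) for values in zip(*known)]


# Hypothetical 3-dimensional vectors, for illustration only.
toy_vectors = {
    "shocking": [1.0, 0.0, 2.0],
    "trick": [3.0, 2.0, 0.0],
}
print(average_embedding(["shocking", "new", "trick"], toy_vectors, dim=3))
# [2.0, 1.0, 1.0]
```

The resulting fixed-length vector can be fed to any standard classifier, whatever the headline's length.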

#6 (about 2 minutes)

Evaluating the performance of the Word2Vec classifier

The classifier trained on averaged word embeddings achieves 95% accuracy, with errors often occurring on headlines with misleading topics or tones.
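
Accuracy is the share of predictions that match the true labels. The error analysis described above also needs the misclassified headlines themselves. This hypothetical helper returns both, assuming labels are encoded as 1 for clickbait and 0 otherwise.

```python
def evaluate(headlines, y_true, y_pred):
    """Return accuracy and the misclassified headlines for manual inspection."""
    if not (len(headlines) == len(y_true) == len(y_pred)):
        raise ValueError("inputs must have the same length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    errors = [(h, t, p) for h, t, p in zip(headlines, y_true, y_pred) if t != p]
    return correct / len(y_true), errors


accuracy, errors = evaluate(["a", "b", "c", "d"], [1, 0, 1, 0], [1, 0, 0, 0])
print(accuracy, errors)
```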

#7 (about 3 minutes)

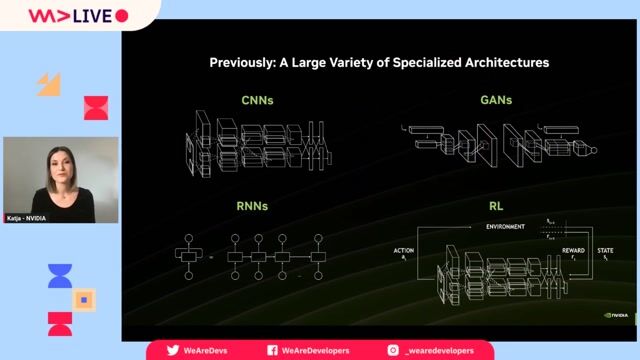

Overcoming context limitations with transformer models

Transformer models use a self-attention mechanism to weigh the importance of other words in a sentence, allowing them to understand a word's meaning in its specific context.
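
The core of self-attention is scaled dot-product attention, softmax(QKᵀ / √d) · V. The sketch below simplifies it by using the token vectors directly as queries, keys and values. Real transformers instead apply learned projection matrices and run several attention heads in parallel. Each output row is a mix of all token vectors, weighted by how strongly that token attends to the others.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with identity projections (Q = K = V = x)."""
    d = len(x[0])
    outputs, weights = [], []
    for query in x:
        # Similarity of this token to every token in the sequence, scaled by sqrt(d).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in x]
        w = softmax(scores)
        weights.append(w)
        # New representation: attention-weighted mix of all value vectors.
        outputs.append([sum(wi * value[j] for wi, value in zip(w, x)) for j in range(d)])
    return outputs, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical 2-d token vectors
out, attn = self_attention(tokens)
print(attn[0])
```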

#8 (about 5 minutes)

Understanding how the BERT model is pre-trained

BERT learns a deep understanding of language by being pre-trained on two tasks: predicting masked words (masked language modeling) and predicting whether one sentence actually follows another (next sentence prediction). This pre-training lets it be fine-tuned for specific applications.
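
The masking rule from the original BERT paper selects about 15% of token positions. Of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. The model must then recover the original token at every selected position. This sketch works on whole words rather than BERT's WordPiece token IDs and selects each position independently. Both are simplifying assumptions, and next sentence prediction is not shown.

```python
import random


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """BERT-style masking: select ~mask_prob of positions; of those,
    80% -> [MASK], 10% -> random vocab word, 10% -> unchanged.
    Returns (inputs, labels); labels is None where no prediction is needed."""
    rng = random.Random(seed)
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = token  # the model must predict the original token here
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = "[MASK]"
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)
        # else: keep the original token unchanged
    return inputs, labels


print(mask_tokens("the cat sat on the mat".split(), ["dog", "ran"], seed=1))
```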

#9 (about 7 minutes)

Fine-tuning a BERT model with the Transformers library

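Using the Hugging Face Transformers library, a pre-trained DistilBERT model is fine-tuned for the clickbait classification task. This requires tokenizing the headlines into token IDs together with attention masks that tell the model which positions are real tokens and which are padding.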
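
In Transformers, calling a tokenizer loaded with `AutoTokenizer.from_pretrained("distilbert-base-uncased")` on a list of texts with `padding=True, truncation=True` returns `input_ids` and an `attention_mask`. The sketch below reproduces only the padding logic in plain Python, so it is clear what the mask contains. The token IDs are hypothetical and `pad_id=0` is an assumption.

```python
def pad_batch(sequences, pad_id=0):
    """Pad token-ID sequences to equal length and build matching attention masks."""
    max_len = max(len(seq) for seq in sequences)
    input_ids, attention_mask = [], []
    for seq in sequences:
        padding = max_len - len(seq)
        input_ids.append(list(seq) + [pad_id] * padding)
        # 1 = real token the model should attend to, 0 = padding to ignore.
        attention_mask.append([1] * len(seq) + [0] * padding)
    return {"input_ids": input_ids, "attention_mask": attention_mask}


batch = pad_batch([[101, 2023, 102], [101, 102]])  # hypothetical token IDs
print(batch)
```

Without the mask, the self-attention layers would treat the padding positions as real words and mix them into every token's representation.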

#10 (about 2 minutes)

Choosing the right text processing model for your task

While the fine-tuned BERT model achieves the highest accuracy at 99%, simpler methods like count vectorization can outperform Word2Vec and may be sufficient depending on the use case.

#11 (about 2 minutes)

Using word embeddings to improve downstream NLP tasks

Word embeddings can be combined with other techniques, such as TF-IDF weighting, to extract more signal and improve performance on tasks like sentiment analysis.
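
One common way to combine the two is a TF-IDF-weighted average. Each word vector is weighted by its term frequency times its inverse document frequency, so rare and informative words count more than ubiquitous ones. The sketch below uses the smoothed IDF formula ln((1 + n) / (1 + df)) + 1, which is the variant scikit-learn applies by default. The toy corpus and vectors are hypothetical.

```python
import math
from collections import Counter


def idf_weights(documents):
    """Smoothed inverse document frequency for every token in a tokenized corpus."""
    n_docs = len(documents)
    doc_freq = Counter(token for doc in documents for token in set(doc))
    return {tok: math.log((1 + n_docs) / (1 + df)) + 1 for tok, df in doc_freq.items()}


def tfidf_weighted_embedding(tokens, word_vectors, idf, dim):
    """Weight each known word vector by TF x IDF, then divide by the total weight."""
    tf = Counter(tokens)
    total_weight = 0.0
    summed = [0.0] * dim
    for token, count in tf.items():
        if token not in word_vectors or token not in idf:
            continue  # skip words without a vector or IDF value
        weight = count * idf[token]
        total_weight += weight
        summed = [s + weight * v for s, v in zip(summed, word_vectors[token])]
    if total_weight == 0:
        return summed  # all-zero vector when no word is known
    return [s / total_weight for s in summed]


corpus = [["shocking", "trick"], ["budget", "vote"], ["shocking", "budget"]]
vectors = {"shocking": [1.0, 0.0], "trick": [0.0, 1.0]}
idf = idf_weights(corpus)
print(tfidf_weighted_embedding(["shocking", "trick"], vectors, idf, dim=2))
```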

#12 (about 2 minutes)

Addressing overfitting and feature leakage in production

Preventing overfitting involves using validation sets, ensuring representative data samples, and checking for feature leakage, where a feature inadvertently reveals the outcome.
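
One simple heuristic for spotting possible leakage is to look for tokens that appear in many documents yet almost always carry the same label. A source name or ID that only ever occurs with one class is a typical culprit. The sketch below flags such tokens for manual review. The thresholds and the example data are assumptions, and a flagged token is not proof of leakage, since some words are legitimately predictive.

```python
from collections import Counter, defaultdict


def flag_leaky_tokens(documents, labels, min_docs=20, purity=0.99):
    """Flag tokens that occur in at least `min_docs` documents and whose
    majority label covers at least `purity` of those documents."""
    doc_labels = defaultdict(list)
    for tokens, label in zip(documents, labels):
        for token in set(tokens):  # count each token once per document
            doc_labels[token].append(label)
    flagged = []
    for token, token_labels in doc_labels.items():
        if len(token_labels) < min_docs:
            continue
        majority_share = Counter(token_labels).most_common(1)[0][1] / len(token_labels)
        if majority_share >= purity:
            flagged.append((token, len(token_labels), majority_share))
    return sorted(flagged, key=lambda item: -item[1])


# Hypothetical data: "sourcex" only ever appears in clickbait (label 1).
docs = [["sourcex", "you", f"a{i}"] for i in range(30)] + [["you", f"b{i}"] for i in range(30)]
labels = [1] * 30 + [0] * 30
print(flag_leaky_tokens(docs, labels))
```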

#13 (about 2 minutes)

Handling out-of-vocabulary and rare terms in NLP

For rare or out-of-vocabulary terms that models struggle with, symbolic rule-based approaches can be used as a complementary system to handle important edge cases.
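
A minimal sketch of such a hybrid system: hand-written rules for known edge cases are checked first, and the statistical model handles everything else. A helper also lists the out-of-vocabulary tokens that might motivate new rules. The rule, the labels (1 = clickbait, 0 = not), and the stand-in model are all hypothetical.

```python
import re


def oov_terms(tokens, vocabulary):
    """Tokens the embedding model has never seen (candidates for new rules)."""
    return [t for t in tokens if t not in vocabulary]


def hybrid_classify(headline, model_predict, rules):
    """Check symbolic rules for known edge cases first, then fall back to the model."""
    for pattern, label in rules:
        if pattern.search(headline):
            return label, "rule"
    return model_predict(headline), "model"


# Hypothetical rule: headlines naming a newly coined term are treated as news.
rules = [(re.compile(r"\bcovid-19\b", re.IGNORECASE), 0)]


def fake_model(headline):
    """Stand-in for a trained classifier; always predicts clickbait."""
    return 1


print(hybrid_classify("New COVID-19 guidance issued", fake_model, rules))
print(hybrid_classify("You won't believe this", fake_model, rules))
```

Returning the source of each decision ("rule" or "model") makes it easy to audit how often the rules fire and whether they still help as the model is retrained.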

#14 (about 3 minutes)

Advice for starting a career in data science

Aspiring data scientists should focus on gaining hands-on experience with real-world datasets and building a portfolio of projects to develop an intuition for common issues.

Related jobs

Jobs that call for the skills explored in this talk.

Picnic Technologies B.V.

Amsterdam, Netherlands

Intermediate

Senior

Python

Structured Query Language (SQL)

+1

Matching moments

02:20 MIN

The evolving role of the machine learning engineer

AI in the Open and in Browsers - Tarek Ziadé

04:57 MIN

Increasing the value of talk recordings post-event

Cat Herding with Lions and Tigers - Christian Heilmann

04:09 MIN

How Python became the dominant language for AI

AI in the Open and in Browsers - Tarek Ziadé

03:07 MIN

Final advice for developers adapting to AI

WeAreDevelopers LIVE – AI, Freelancing, Keeping Up with Tech and More

06:44 MIN

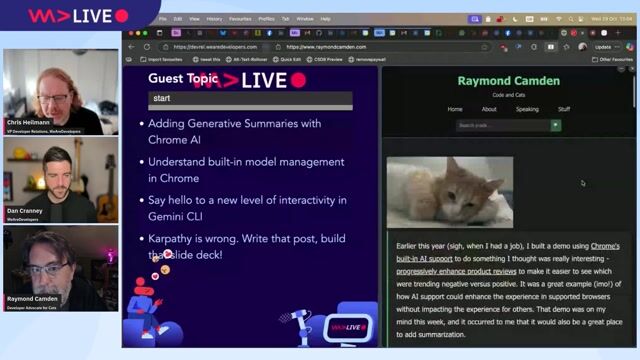

Using Chrome's built-in AI for on-device features

Devs vs. Marketers, COBOL and Copilot, Make Live Coding Easy and more - The Best of LIVE 2025 - Part 3

09:10 MIN

How AI is changing the freelance developer experience

WeAreDevelopers LIVE – AI, Freelancing, Keeping Up with Tech and More

04:28 MIN

Building an open source community around AI models

AI in the Open and in Browsers - Tarek Ziadé

05:17 MIN

Shifting from traditional CVs to skill-based talent management

From Data Keeper to Culture Shaper: The Evolution of HR Across Growth Stages

Featured Partners

Related Videos

52:37

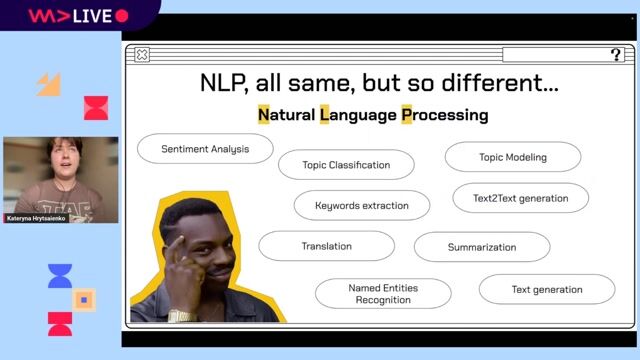

52:37Multilingual NLP pipeline up and running from scratch

Kateryna Hrytsaienko

47:28

47:28What do language models really learn

Tanmay Bakshi

57:46

57:46Overview of Machine Learning in Python

Adrian Schmitt

56:55

56:55Multimodal Generative AI Demystified

Ekaterina Sirazitdinova

27:23

27:23From ML to LLM: On-device AI in the Browser

Nico Martin

57:52

57:52Develop AI-powered Applications with OpenAI Embeddings and Azure Search

Rainer Stropek

58:00

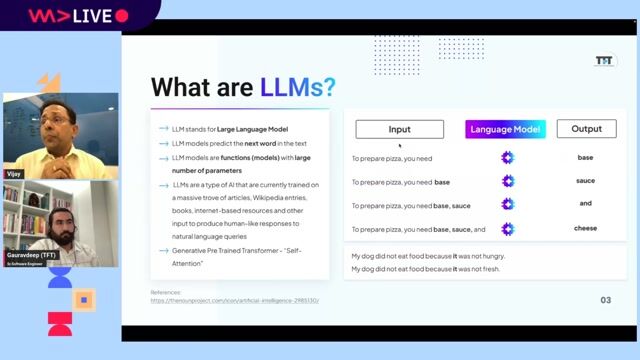

58:00Creating Industry ready solutions with LLM Models

Vijay Krishan Gupta & Gauravdeep Singh Lotey

59:39

59:39Building Products in the era of GenAI

Julian Joseph

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

Forschungszentrum Jülich GmbH

Jülich, Germany

Intermediate

Senior

Linux

Docker

AI Frameworks

Machine Learning

Wilken GmbH

Ulm, Germany

Senior

Kubernetes

AI Frameworks

GitHub Copilot

Anthropic Claude

Cloud (AWS/Google/Azure)

Tetra Tech, Inc.

Newcastle upon Tyne, United Kingdom

Remote

Data analysis

The Rolewe

Charing Cross, United Kingdom

API

Python

Machine Learning

DeepL

London

Contract

Published: 9 hours ago

Competitive

Charing Cross, United Kingdom

Remote

Redis

Python

Grafana

FastAPI

+6

Eindhoven University of Technology

Eindhoven, Netherlands

Remote

React

Plotly

Next.js

Machine Learning